Getting Started with Crew.ai: Building Crews in Python

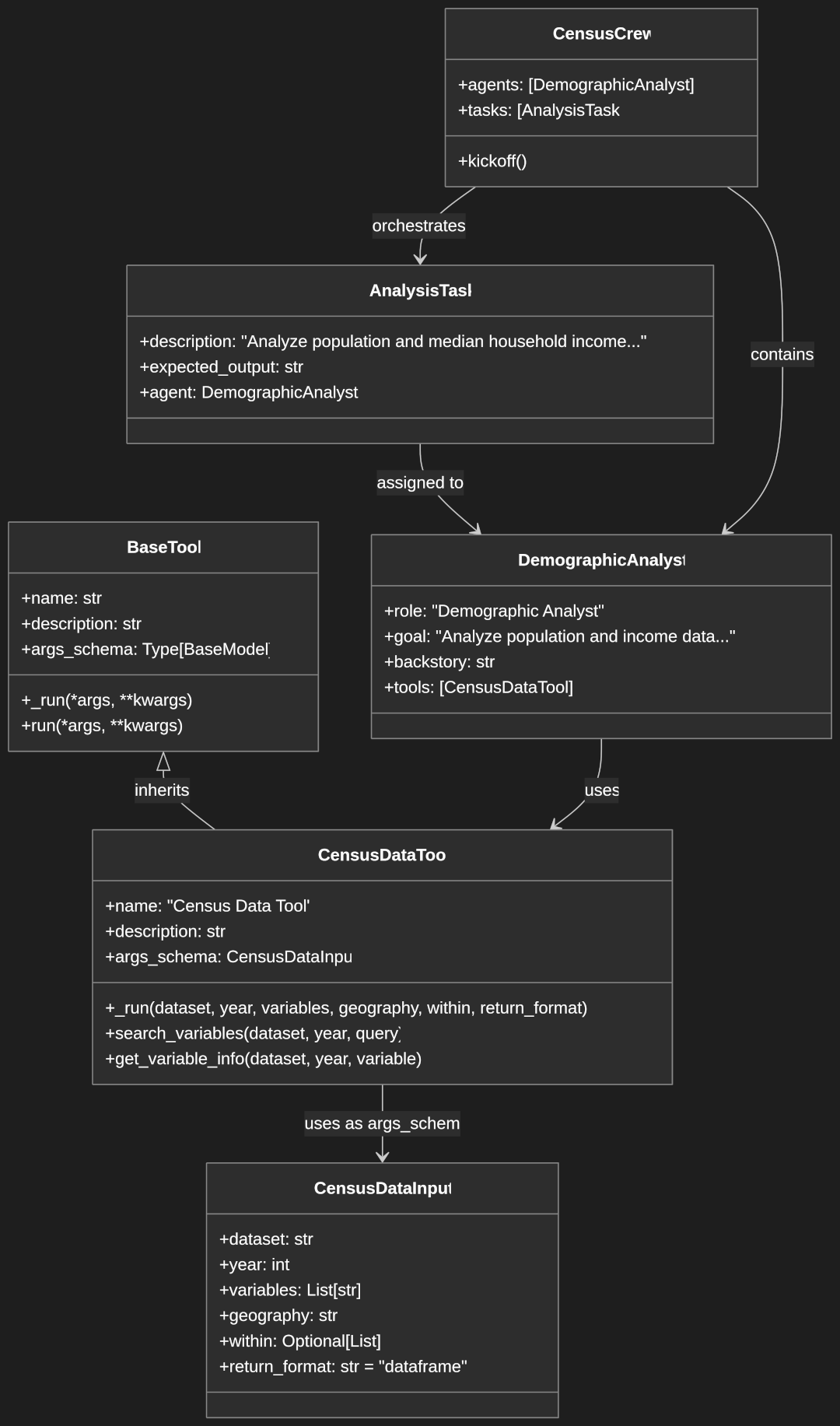

What is Crew.ai? Crew.ai is an open-source Python framework for orchestrating role-based, autonomous AI agents. It lets you define teams of agents, each with its own role, goal, and tools, that collaborate to complete complex, multi-step tasks.

Why use AI agent orchestration? In today’s rapidly evolving technological landscape, single-purpose AI solutions often fall short when facing complex, multi-faceted challenges. Crew.ai addresses this limitation by enabling multiple AI agents to collaborate, each bringing specialized capabilities to the table.

Key benefits and capabilities include:

- Streamlined task delegation and management

- Efficient collaboration between specialized AI agents

- Scalable architecture for handling complex workflows

- Integration with leading Large Language Models (LLMs)

Understanding Crew.ai Architecture

Core Concepts

Crew.ai is a Python framework that works with a range of LLM providers. Its role-based architecture lets you assign each agent a precise responsibility, while the LLM behind each agent supplies its reasoning.

Key Components

Individual agents are the basic building blocks of the system. Each agent can work on its own tasks while still collaborating with the others. A crew provides the organizational framework: it groups agents with the tasks they must complete and a process that determines how the work flows. With the hierarchical process, a manager agent oversees operations and delegates tasks to the other agents.

Crew.ai's memory system is enabled by setting `memory=True` on a crew. It provides short-term, long-term, and entity memory. Together these preserve context within a run and, for long-term memory, across runs.

Setting Up Crew.ai

Installation Requirements

Getting started with Crew.ai requires:

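- A Python environment (Crew.ai requires Python 3.10 or newer; check the official installation guide for the currently supported upper bound)

- A way to manage dependencies, such as a virtual environment or a uv project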

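- Package installation via uv (see our uv guide for installation and usage details)

```bash
# Install uv if you don't already have it
curl -LsSf https://astral.sh/uv/install.sh | sh

# Install the crewai CLI with uv
uv tool install crewai
```

`uv tool install crewai` installs the `crewai` command-line tool in its own isolated environment. To import Crew.ai in your own scripts, you also need to add the package to your project. For example, run `uv add crewai` inside a uv project, or `uv pip install crewai` in an active virtual environment.

The examples in this article also draw on LangChain packages, which the command above does not install:

- langchain: provides foundational LLM interaction capabilities

- langchain-community: includes additional tools and integrations with third-party services, such as the DuckDuckGo search tool used later (that tool also needs the `duckduckgo-search` package)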
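Before running the examples, it can help to confirm that your interpreter and packages are in place. The sketch below is a small standard-library check. The module list and the 3.10 minimum are assumptions based on the packages used in this article, so adjust them to your setup.

```python
import importlib.util
import sys

# Assumed list: distribution name -> importable module name for this article
REQUIRED_MODULES = {
    "crewai": "crewai",
    "langchain-community": "langchain_community",
    "langchain-experimental": "langchain_experimental",
}

def check_environment(min_version=(3, 10)):
    """Report whether the interpreter is new enough and which packages import."""
    report = {"python_ok": sys.version_info[:2] >= min_version}
    for dist_name, module_name in REQUIRED_MODULES.items():
        # find_spec returns None (rather than raising) for a missing top-level module
        report[dist_name] = importlib.util.find_spec(module_name) is not None
    return report

if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:24} {'OK' if ok else 'MISSING'}")
```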

Basic Configuration

Initial setup involves creating your first agent and crew:

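```python
from crewai import Agent, Crew, Process, Task

# Create agents; role, goal and backstory are all required fields
agent1 = Agent(
    role="Researcher",
    goal="Gather accurate information on a given topic",
    backstory="You are a thorough researcher who double-checks sources",
)
agent2 = Agent(
    role="Writer",
    goal="Turn research notes into clear, readable prose",
    backstory="You are a technical writer who values clarity",
)

# A crew needs at least one task; in the hierarchical process the
# manager decides which agent handles it, so no agent is assigned here
summary_task = Task(
    description="Research and summarize the benefits of multi-agent systems",
    expected_output="A three-paragraph summary",
)

# Create crew; manager_llm only takes effect with Process.hierarchical
crew = Crew(
    agents=[agent1, agent2],
    tasks=[summary_task],
    process=Process.hierarchical,
    manager_llm="gpt-4",
)
```

This basic setup demonstrates:

- Importing the essential classes from the Crew.ai framework

- Creating two agents with distinct roles (researcher and writer), each with the required goal and backstory

- Defining a task for the crew to complete

- Assembling the agents and the task into a crew structure

- Selecting the hierarchical process, in which a manager powered by GPT-4 (`manager_llm`) delegates work to the agents

- Note that nothing runs until you call `crew.kickoff()`, which also needs an API key for the chosen model (for OpenAI models, the `OPENAI_API_KEY` environment variable). Production crews usually need more configuration than this minimal example.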

Creating and Managing Agents

Agent Configuration

Agent configuration involves defining specific roles, assigning capabilities, and integrating necessary tools. Each agent can be customized to handle particular tasks within the larger system.

Memory Management

Crew.ai’s sophisticated memory system includes:

- Short-term memory for immediate task context

- Long-term memory for persistent knowledge

- Shared knowledge bases for team-wide information access
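To make the difference between short-term and long-term memory concrete, here is a toy model in plain Python. It is not how Crew.ai stores memory internally; Crew.ai's actual implementation relies on embedding and database storage. The sketch only illustrates the idea:

- Short-term memory is a bounded buffer of recent observations for the current task.
- Long-term memory is a store keyed by topic that outlives a single task.

`ToyMemory` and its methods are hypothetical names.

```python
from collections import deque

class ToyMemory:
    """Illustrative only: a short-term buffer plus a long-term key/value store."""

    def __init__(self, short_term_size=3):
        # Short-term: only the most recent observations are kept
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: lessons keyed by topic that survive across tasks
        self.long_term = {}

    def observe(self, note):
        self.short_term.append(note)

    def remember(self, topic, lesson):
        self.long_term.setdefault(topic, []).append(lesson)

    def context_for(self, topic):
        """Combine recent notes with stored lessons for a topic."""
        return list(self.short_term) + self.long_term.get(topic, [])

    def end_task(self):
        # Short-term context is discarded when a task finishes
        self.short_term.clear()

mem = ToyMemory(short_term_size=2)
for note in ["fetched page A", "fetched page B", "fetched page C"]:
    mem.observe(note)
mem.remember("search", "prefer primary sources")
print(mem.context_for("search"))
# ['fetched page B', 'fetched page C', 'prefer primary sources']
mem.end_task()
print(mem.context_for("search"))
# ['prefer primary sources']
```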

Code Example: Creating Custom Agents

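```python
from crewai import Agent, Crew, Task
from crewai.tools import tool

# Custom tools are plain functions wrapped with the @tool decorator;
# the docstring becomes the tool description the agent sees
@tool
def count_words(text: str) -> str:
    """Count the number of words in a piece of text."""
    return str(len(text.split()))

custom_agent = Agent(
    role="Specialist",
    goal="Answer domain questions precisely",
    backstory="You are a careful specialist who verifies facts with tools",
    tools=[count_words],
)

word_count_task = Task(
    description="Count the words in this sentence: 'Crew.ai agents collaborate on tasks.'",
    expected_output="The word count as a single number",
    agent=custom_agent,
)

# Memory is configured on the crew rather than the agent; memory=True
# enables short-term, long-term and entity memory for the crew's agents
crew = Crew(agents=[custom_agent], tasks=[word_count_task], memory=True)
```

This example shows how to create an agent with:

- A defined specialist role that shapes the agent’s approach to tasks, plus the goal and backstory that every agent requires

- A custom tool that extends the agent’s capabilities, defined with the `@tool` decorator from `crewai.tools`

- Memory switched on for the crew with `memory=True`. This gives agents short-term memory for the current run, plus long-term and entity memory that persist between runs. By default these memory stores use OpenAI embeddings, so an OpenAI API key is needed unless you configure a different embedder.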

Building a Crew

Crew Structure Setup

Creating an effective crew requires:

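- Configuring a manager agent to oversee operations (used by the hierarchical process)

- Organizing agents in a logical hierarchy

- Establishing clear hand-offs: each task states what it needs and what it must produce (`description` and `expected_output`), and the crew’s process (sequential or hierarchical) determines how outputs flow between agents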
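In the hierarchical process, the manager (an LLM-driven agent) reads each task and decides which agent should handle it. The toy sketch below replaces that LLM decision with simple keyword matching so the control flow is visible. `route_task` and the `AGENT_SKILLS` keyword table are hypothetical, not part of Crew.ai's API.

```python
# Hypothetical keyword table standing in for the manager LLM's judgment
AGENT_SKILLS = {
    "Research Specialist": {"research", "find", "investigate"},
    "Content Writer": {"write", "draft", "article"},
    "Content Editor": {"edit", "polish", "proofread"},
}

def route_task(description, skills=AGENT_SKILLS, default="Content Writer"):
    """Pick the agent whose keywords overlap most with the task description."""
    words = set(description.lower().split())
    best_agent, best_score = default, 0
    for agent, keywords in skills.items():
        score = len(words & keywords)
        if score > best_score:  # ties keep the earlier agent
            best_agent, best_score = agent, score
    return best_agent

tasks = [
    "Research recent agent frameworks",
    "Write an article from the notes",
    "Polish and proofread the draft",
]
for task in tasks:
    print(f"{route_task(task):20} <- {task}")
```

A real manager can weigh context that keywords miss, which is why Crew.ai delegates this decision to an LLM.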

Example: Research and Content Creation Crew

```python
from crewai import Agent, Crew, Task, Process, LLM
from crewai.tools import tool
from langchain_community.tools import DuckDuckGoSearchRun

# Configure LLM
llm = LLM(
    model="gpt-4",  # Alternative: 'anthropic/claude-3-sonnet-20240229'
    temperature=0.7,  # Controls creativity vs determinism
    timeout=120,  # Maximum response time in seconds
)

# Create internet search tool
@tool
def search_internet(query: str) -> str:
    """Search the internet for information about a topic."""
    search_tool = DuckDuckGoSearchRun()
    return search_tool.run(query)

# Create specialized agents with clear roles
researcher = Agent(
    role="Research Specialist",
    goal="Find comprehensive information on AI topics",
    backstory="You're an expert researcher with access to vast information sources",
    verbose=True,  # Enables detailed logging
    llm=llm,
    tools=[search_internet],  # Only the researcher needs search capabilities
)

writer = Agent(
    role="Content Writer",
    goal="Create engaging, accurate content based on research",
    backstory="You're a skilled writer specializing in technical topics",
    verbose=True,
    llm=llm,
)

editor = Agent(
    role="Content Editor",
    goal="Polish and refine content to ensure quality and accuracy",
    backstory="You're a meticulous editor with an eye for detail",
    verbose=True,
    llm=llm,
)

# Define sequential tasks with clear inputs/outputs
research_task = Task(
    description="Research the latest developments in AI agent orchestration",
    expected_output="A comprehensive research document with key findings",
    agent=researcher,
)

writing_task = Task(
    description="Write an article based on the research findings",
    expected_output="A well-structured 1500-word article on AI agent orchestration",
    agent=writer,
)

editing_task = Task(
    description="Edit and improve the article for clarity and accuracy",
    expected_output="A polished final article ready for publication",
    agent=editor,
)

# Create crew with sequential process flow
content_crew = Crew(
    agents=[researcher, writer, editor],
    tasks=[research_task, writing_task, editing_task],
    verbose=True,
    process=Process.sequential,  # Tasks run in order; each output feeds the next task
)

# Execute workflow and capture results
print("\n=== STARTING AI AGENT ORCHESTRATION ARTICLE CREATION ===\n")
result = content_crew.kickoff()

# Access individual task outputs. Task.output is None until the task has run,
# so test it directly (hasattr() is always True and cannot detect this)
print("\n=== RESEARCH OUTPUT ===\n")
research_output = research_task.output.raw if research_task.output is not None else "Research output not available"
print(research_output)

print("\n=== ARTICLE DRAFT ===\n")
writing_output = writing_task.output.raw if writing_task.output is not None else "Writing output not available"
print(writing_output)

print("\n=== FINAL EDITED ARTICLE ===\n")
final_output = editing_task.output.raw if editing_task.output is not None else "Editing output not available"
print(final_output)
```

This code block demonstrates a complete content creation workflow using Crew.ai’s native components:

- Native LLM configuration:
  - Uses Crew.ai’s built-in `LLM` class for direct model integration
  - Configures GPT-4 with explicit temperature and timeout parameters
  - The temperature value of 0.7 balances creativity and determinism
  - The timeout of 120 seconds caps how long a single model request may take
- Agent creation with clear responsibilities:
  - Each agent has a specific role, goal, and backstory that shape its behavior
  - The `verbose=True` parameter enables detailed logging of agent activities
  - Three specialized agents form a complete content pipeline:
    - Researcher: equipped with a search tool to gather information
    - Writer: transforms research into structured content
    - Editor: refines and improves the final output
- Tool creation and integration:
  - Uses the `@tool` decorator to wrap an external tool for agent use; the function’s docstring becomes the tool description the agent reads
  - The `search_internet` tool instantiates LangChain’s `DuckDuckGoSearchRun`
  - The tool is assigned only to the researcher, the one agent that needs external information
- Well-defined task structure:
  - Each task includes a clear description and expected output format
  - Tasks are assigned to specific agents based on their expertise
  - The sequence forms a logical workflow from research to writing to editing
- Explicit results processing:
  - Reads each task’s raw output after the run, with a placeholder message for any task that produced no output
  - Prints each stage’s results separately for review and debugging
- Sequential process configuration:
  - `process=Process.sequential` ensures tasks run in order
  - The output of each task is passed as context to the next, so the research feeds the writer, whose draft feeds the editor
- Console feedback during execution:
  - Banner messages mark the start of the run and each stage of the results
  - `verbose=True` on the agents and the crew streams their intermediate steps to the console, so you can follow progress as it happens

This implementation showcases a complete end-to-end content production workflow where each agent performs a specialized function, with outputs flowing naturally from one stage to the next, resulting in a polished final article.
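Conceptually, `Process.sequential` behaves like a pipeline. Each task runs once, in list order, and later tasks see what earlier tasks produced. The sketch below models that hand-off with plain Python functions standing in for agents. `run_sequential` and the stage functions are hypothetical; real Crew.ai tasks call an LLM instead.

```python
def research(context):
    # First stage: no earlier outputs to draw on
    return "notes: agents, tools, memory"

def write(context):
    # The writer only sees what earlier stages produced
    return f"article based on [{context[-1]}]"

def edit(context):
    return context[-1].replace("article", "polished article")

def run_sequential(stages):
    """Run stages in order, giving each one the outputs of all earlier stages."""
    outputs = []
    for stage in stages:
        outputs.append(stage(list(outputs)))  # pass a copy of prior outputs
    return outputs

outputs = run_sequential([research, write, edit])
print(outputs[-1])
# polished article based on [notes: agents, tools, memory]
```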

Practical Application

Problem-Solving Scenarios

For this example you’ll need pandas, matplotlib, and seaborn installed. The agents generate Python code to create charts, and the LLM may choose either matplotlib or seaborn.

```bash
pip install pandas matplotlib seaborn
```

The `PythonREPLTool` used below is part of the langchain-experimental package:

```bash
pip install langchain-experimental
```

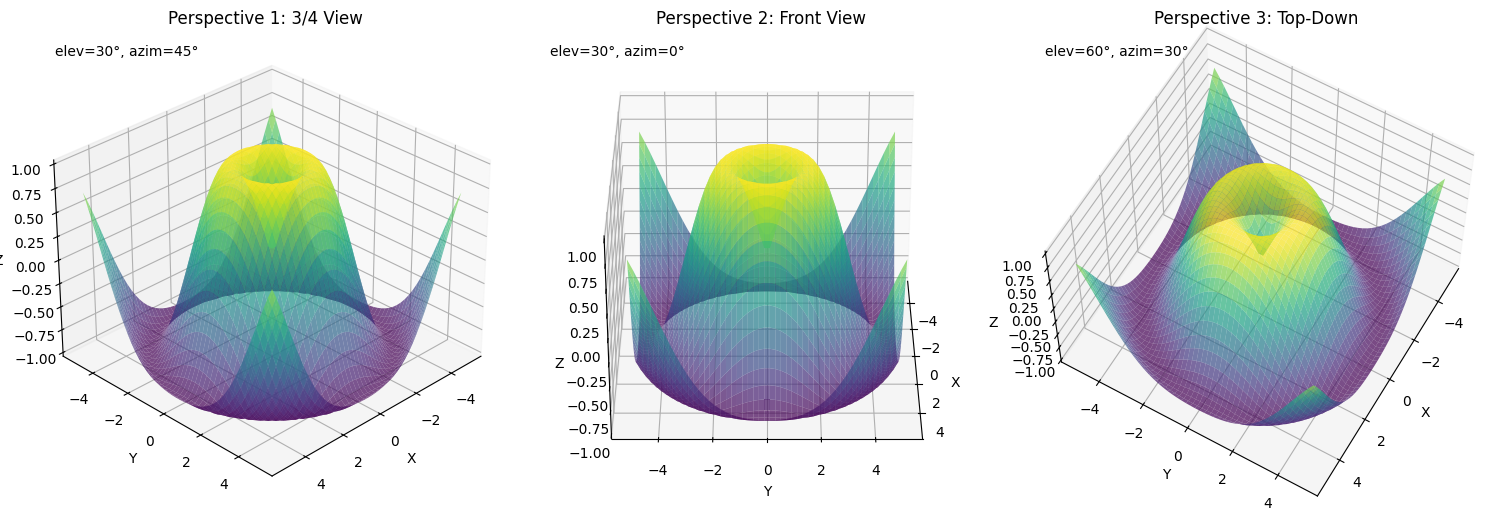

Example: Complex Data Analysis Project

```python
from crewai import Agent, Task, Crew, LLM
from crewai.tools import tool
from langchain_experimental.tools import PythonREPLTool

# Configure LLM (passed to every agent below)
llm = LLM(model="gpt-4", temperature=0.7)

# Create the REPL once so that state such as a loaded DataFrame can carry
# over between calls. pandas, matplotlib and seaborn are imported by the
# code the agents write, so they only need to be installed.
python_repl = PythonREPLTool()

# Create Python execution tool
@tool
def run_python(code: str) -> str:
    """Execute Python code and return its printed output. Use print() to see values."""
    return python_repl.run(code)

# Create specialized data agents
data_engineer = Agent(
    role="Data Engineer",
    goal="Prepare and clean data for analysis",
    backstory="You're an expert at data preparation and cleaning",
    tools=[run_python],
    llm=llm,
    verbose=True,
)

data_analyst = Agent(
    role="Data Analyst",
    goal="Analyze data to extract meaningful insights",
    backstory="You're skilled at finding patterns and insights in data",
    tools=[run_python],
    llm=llm,
    verbose=True,
)

data_visualizer = Agent(
    role="Data Visualization Specialist",
    goal="Create clear visualizations of analysis results",
    backstory="You transform complex data into understandable visuals",
    tools=[run_python],
    llm=llm,
    verbose=True,
)

# Create data pipeline tasks; {dataset_path} is filled in from kickoff(inputs=...)
data_prep_task = Task(
    description="Load the sales dataset from {dataset_path} with pandas, then clean and prepare it for analysis",
    expected_output="A description of the cleaned dataset: columns, row count, and cleaning steps applied",
    agent=data_engineer,
)

analysis_task = Task(
    description="Analyze sales trends and identify key factors affecting performance",
    expected_output="A detailed analysis report with key findings",
    agent=data_analyst,
    context=[data_prep_task],  # Passes the output of data_prep_task as context
)

visualization_task = Task(
    description="Create visualizations showing the main insights from the analysis and save each chart as a PNG file",
    expected_output="A list of the saved chart files with a short explanation of each",
    agent=data_visualizer,
    context=[analysis_task],  # Passes the output of analysis_task as context
)

# Create and run the data analysis crew
data_crew = Crew(
    agents=[data_engineer, data_analyst, data_visualizer],
    tasks=[data_prep_task, analysis_task, visualization_task],
    verbose=True,  # Enable detailed logging
)

# "sales.csv" is a placeholder; point it at your own dataset
result = data_crew.kickoff(inputs={"dataset_path": "sales.csv"})
print(result)
```

This data analysis implementation shows an analytical pipeline built with Crew.ai’s framework:

- Python execution environment integration:
  - Implements the `run_python` tool with the `@tool` decorator, wrapping LangChain’s `PythonREPLTool`
  - Lets agents dynamically generate and execute Python code for data manipulation
  - Important: `PythonREPLTool` runs code in your own Python process with the same permissions as your script; it is not a sandbox. Generated code can read and write files, make network calls, and import any installed package, so run this example only in an isolated environment (such as a container or disposable VM) and never point it at sensitive data
- Specialized data science agents:
  - Creates three distinct agents with complementary capabilities:
    - Data Engineer: focuses on data cleaning, normalization, and preparation
    - Data Analyst: specializes in statistical analysis and insight extraction
    - Data Visualization Specialist: converts analytical findings into visual representations
  - Each agent receives the Python execution tool, so it can write and run code appropriate to its role
  - All agents are configured with `verbose=True` to provide visibility into their reasoning and actions
  - Backstories shape each agent’s approach to its part of the work
- Progressive data pipeline:
  - Implements a three-stage workflow that mirrors common data science practice:
    - Stage 1: data preparation ensures the data is clean and usable
    - Stage 2: analysis extracts patterns and insights from the prepared data
    - Stage 3: visualization translates the findings into understandable graphics
  - Each stage builds on the previous one through explicit dependencies
- Contextual task chaining:
  - The `context` parameter passes outputs between pipeline stages:
    - The analysis task receives the preparation task’s output, a description of the cleaned dataset
    - The visualization task receives the findings from the analysis task
  - Listing `context` explicitly limits each task to the outputs it actually needs
- Centralized crew management:
  - The `data_crew` object orchestrates the entire analytical workflow
  - Crews use the sequential process by default, so tasks run in the order listed
  - Verbose logging provides visibility into the entire process
  - A single `kickoff()` call starts the complete analytical pipeline
- Flexible structure:
  - The architecture adapts easily to different analytical needs
  - Additional agents or tasks can be inserted for more complex workflows
  - The same pattern works for financial analysis, marketing data, scientific research, and similar domains
  - Tool assignments can be customized for each agent’s specific requirements
- Output capture:
  - The `result` variable holds the crew’s final output, which comes from the last task
  - Each task’s individual output remains available through its `output` attribute after the run
  - The example has no error handling of its own; the retry and fallback techniques shown later in this article can be wrapped around `kickoff()`
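In a sequential crew, tasks still run in list order. That means every task named in another task's `context` should appear earlier in the `tasks` list. The helpers below check this using plain task names; `check_context_order` and `suggest_order` are hypothetical, not Crew.ai API. Python's standard `graphlib` module is used to suggest an order that satisfies the dependencies.

```python
from graphlib import CycleError, TopologicalSorter

def check_context_order(task_order, context):
    """Return (task, dependency) pairs where the dependency does not run earlier.

    task_order lists every task name; context maps each task to the tasks it reads from.
    """
    position = {name: i for i, name in enumerate(task_order)}
    problems = []
    for task, deps in context.items():
        for dep in deps:
            if dep not in position or position[dep] >= position[task]:
                problems.append((task, dep))
    return problems

def suggest_order(context):
    """Return one dependency-respecting order; raises CycleError on circular context."""
    return list(TopologicalSorter(context).static_order())

context = {
    "data_prep": [],
    "analysis": ["data_prep"],
    "visualization": ["analysis"],
}
print(check_context_order(["data_prep", "analysis", "visualization"], context))  # []
print(check_context_order(["analysis", "data_prep", "visualization"], context))
print(suggest_order(context))  # ['data_prep', 'analysis', 'visualization']

try:
    suggest_order({"a": ["b"], "b": ["a"]})
except CycleError:
    print("cycle detected")
```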

This implementation demonstrates how Crew.ai can orchestrate a complete data science workflow where specialized agents handle different phases of analysis, building upon each other’s work to produce a comprehensive analytical result with accompanying visualizations.
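Because `PythonREPLTool` executes generated code inside your own process, one common mitigation is to run each snippet in a separate Python subprocess with a timeout. The sketch below shows that idea using only the standard library. It limits runaway execution and keeps crashes out of your main process. It is still not a security sandbox, because the child process has your user's file and network access. `run_python_isolated` is a hypothetical helper; you could wrap it with Crew.ai's `@tool` decorator in place of `run_python`.

```python
import subprocess
import sys

def run_python_isolated(code: str, timeout: float = 30.0) -> str:
    """Run code in a fresh Python interpreter and return its output or an error."""
    try:
        completed = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,  # the child is killed if it runs longer than this
        )
    except subprocess.TimeoutExpired:
        return f"Error: execution exceeded {timeout} seconds"
    if completed.returncode != 0:
        # Return stderr so the agent can read the traceback and fix its code
        return f"Error:\n{completed.stderr}"
    return completed.stdout

print(run_python_isolated("print(sum(range(10)))"))  # 45
print(run_python_isolated("import time; time.sleep(5)", timeout=1))
```

The trade-off is that no state survives between calls. Each snippet must reload its data, for example from a file written by an earlier step.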

Best Practices and Optimization

Agent Design Principles

Effective agent design is critical for optimal Crew.ai performance. Consider these principles:

Clear role specification: Each agent should have a well-defined domain of expertise with minimal overlap between agents. When roles are ambiguous, agents may struggle with task boundaries or produce redundant work.

```python
from crewai import Agent

# Good role definition
research_agent = Agent(
    role="Financial Data Researcher",
    goal="Discover and analyze financial market trends with emphasis on tech stocks",
    backstory="You are a financial analyst with 15 years of experience in tech sector analysis",
)

# Instead of vague roles like:
# research_agent = Agent(role="Researcher", goal="Do research")
```

This example demonstrates:

- Domain-Specific Role: “Financial Data Researcher” clearly defines the agent’s specialty

- Concrete Goals: Combines both actions (“discover and analyze”) and specific subject matter

- Detailed Backstory: Provides context that shapes the agent’s expertise and approach

- Contrast with Poor Practice: The commented-out vague definition gives the LLM little to work with, which tends to produce generic or inconsistent output

Appropriate skill assignment: Match agent capabilities with their intended tasks by providing relevant tools and knowledge bases. Consider the tradeoff between generalist and specialist agents:

- Specialist agents excel at narrow, well-defined tasks but may struggle with unexpected scenarios

- Generalist agents handle diverse situations better but might lack depth in specific domains
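One way to enforce appropriate skill assignment is to check, before kickoff, that each agent actually holds the tools its tasks need. The sketch below does this with plain dataclasses. `AgentSpec`, `TaskSpec`, `missing_tools`, and the tool names are hypothetical stand-ins for your real Crew.ai objects.

```python
from dataclasses import dataclass, field

@dataclass
class AgentSpec:
    role: str
    tools: set = field(default_factory=set)

@dataclass
class TaskSpec:
    description: str
    agent: AgentSpec
    required_tools: set = field(default_factory=set)

def missing_tools(tasks):
    """Map each task description to the required tools its agent lacks."""
    gaps = {}
    for task in tasks:
        lacking = task.required_tools - task.agent.tools
        if lacking:
            gaps[task.description] = sorted(lacking)
    return gaps

researcher = AgentSpec("Research Specialist", {"web_search"})
analyst = AgentSpec("Data Analyst", {"run_python"})
tasks = [
    TaskSpec("Find recent papers", researcher, {"web_search"}),
    TaskSpec("Chart sales by region", analyst, {"run_python", "save_file"}),
]
print(missing_tools(tasks))  # {'Chart sales by region': ['save_file']}
```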

Robust error handling mechanisms: Implement comprehensive error management to gracefully handle various failure modes:

```python
from crewai import Agent, Task, Crew, Process
from crewai.tools import tool
import logging
import random
import time

# Setup logging
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("crew_ai")

# Define a tool that might fail
@tool
def fetch_data(query: str) -> str:
    """Fetch data that might occasionally fail."""
    # Simulate occasional failures. Crew.ai typically catches exceptions raised
    # inside tools and reports them back to the agent as an error message, so
    # this usually shows up as text in the result rather than as an exception
    if random.random() < 0.3:  # 30% chance of failure
        raise Exception("Simulated data fetch error: API unavailable")
    # Simulate occasional low-quality results
    if random.random() < 0.3:  # 30% chance of low-quality result
        return f"Error: Limited data found for {query}"
    time.sleep(1)  # Simulate API call
    return (
        f"Successfully fetched comprehensive data for: {query}\n"
        "Key insights include trends in neural networks, reinforcement learning, "
        "and transformer models."
    )

# Define fallback procedure
def use_fallback_data(query: str) -> dict:
    """Provide fallback data when primary source fails."""
    logger.info(f"Using fallback data source for: {query}")
    return {
        "query": query,
        "result": (
            "Based on cached information: AI trends include machine learning "
            "applications, large language models, and computer vision advances."
        ),
        "source": "Fallback system (cached data)",
        "reliability": "medium",
    }

# Create a simple agent
data_agent = Agent(
    role="Data Retrieval Specialist",
    goal="Retrieve requested information accurately",
    backstory="You're an expert at finding and processing information from various sources",
    tools=[fetch_data],
    verbose=True,
)

# Define a task
retrieval_task = Task(
    description="Retrieve information about artificial intelligence trends",
    expected_output="A summary of current AI trends",
    agent=data_agent,
)

# Create crew with single agent
data_crew = Crew(
    agents=[data_agent],
    tasks=[retrieval_task],
    verbose=True,
    process=Process.sequential,
)

# Execute with error handling
MAX_RETRIES = 3
retry_count = 0
final_result = None

print("\n=== STARTING DATA RETRIEVAL WITH ERROR HANDLING ===\n")

while retry_count < MAX_RETRIES and final_result is None:
    try:
        # Attempt to run the task
        logger.info(f"Attempt {retry_count + 1} to execute data retrieval task")
        result = data_crew.kickoff()

        # Check if result contains error indicators
        result_text = str(result).lower()
        if "error" in result_text or "limited data" in result_text:
            logger.warning("Quality issues detected in result")
            if retry_count < MAX_RETRIES - 1:
                # Earlier attempts: wait and retry
                logger.info(f"Waiting before retry attempt {retry_count + 2}...")
                time.sleep(2)
                retry_count += 1
                continue
            # Last attempt: use fallback
            logger.info("Using fallback after multiple low-quality results")
            fallback_data = use_fallback_data("artificial intelligence trends")
            final_result = (
                f"After {MAX_RETRIES} attempts with quality issues, using fallback data:\n"
                f"{fallback_data['result']}\n(Source: {fallback_data['source']})"
            )
        else:
            # Success
            logger.info("Successful high-quality result obtained")
            final_result = result

    except Exception as e:
        # Exceptions that escape kickoff() (e.g. LLM API errors) land here
        logger.error(f"Task execution failed: {e}")
        if retry_count < MAX_RETRIES - 1:
            logger.info(f"Retrying after error... ({retry_count + 1}/{MAX_RETRIES})")
            retry_count += 1
            time.sleep(2)
        else:
            # All retries failed, use fallback
            logger.warning("All retries failed due to errors, using fallback data")
            fallback_data = use_fallback_data("artificial intelligence trends")
            final_result = (
                f"After {MAX_RETRIES} failed attempts, using fallback data:\n"
                f"{fallback_data['result']}\n(Source: {fallback_data['source']})"
            )

# Display the final result
print("\n=== FINAL DATA RETRIEVAL RESULT ===\n")
print(final_result)
print("\n=== ERROR HANDLING DEMONSTRATION COMPLETE ===\n")
```

This example demonstrates:

- Complete Working Example: A fully functional agent with error handling capabilities

- Realistic Tool Implementation: Simulates API failures that might occur in production

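- Multi-Layer Error Management: Combines exception handling, for failures that escape `kickoff()` such as LLM API errors, with a check of the result text. Crew.ai typically catches exceptions raised inside tools and reports them to the agent as messages, so the simulated tool failures usually surface in the result text rather than as Python exceptions

- Retry Logic: Retries up to three times with a fixed two-second pause before falling back to an alternate source

- Fallback Mechanism: Provides alternative data sources when primary methods fail

- Quality Checking: Scans the result for keywords such as "error" or "limited data". This is a simple heuristic that can also flag a good answer that happens to mention an error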

- Detailed Logging: Records each step and decision in the error handling process

- Graceful Degradation: Ensures users get useful results even when ideal data isn’t available
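The retry loop above waits a fixed two seconds between attempts. A common refinement is exponential backoff with "full jitter": the maximum wait doubles after each failure, and the actual wait is drawn at random up to that maximum. This way, many clients do not retry in lockstep. The sketch below is a generic helper, not part of Crew.ai. `retry_with_backoff` and its parameters are hypothetical; you would pass it something like `data_crew.kickoff`.

```python
import random
import time

def retry_with_backoff(func, max_attempts=3, base_delay=1.0, max_delay=30.0,
                       sleep=time.sleep):
    """Call func() until it succeeds, waiting longer after each failure.

    Re-raises the last exception once max_attempts is exhausted.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except Exception:
            if attempt == max_attempts:
                raise
            # Exponential backoff: base, 2*base, 4*base, ... capped at max_delay
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: wait a random amount between 0 and the computed delay
            sleep(random.uniform(0, delay))

calls = {"n": 0}

def flaky():
    """Fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"

print(retry_with_backoff(flaky, sleep=lambda seconds: None))  # ok
```

Injecting `sleep` keeps the helper easy to test without real waiting.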

Progressive task complexity: Design your agent workflow to handle progressively complex tasks, building on simple foundations:

- Start with basic information gathering or preprocessing tasks

- Progress to analysis or transformation tasks

- Conclude with synthesis or decision-making tasks

Performance Optimization

Optimizing your Crew.ai implementation can significantly improve efficiency, reduce costs, and enhance results.

Efficient resource allocation:

- Use lightweight models for simple tasks and reserve powerful models for complex reasoning:

```python
from crewai import Agent, LLM

# Tiered model approach
screening_agent = Agent(
    role="Initial Screener",
    llm=LLM(model="gpt-3.5-turbo"),  # Lightweight model for basic tasks
    goal="Filter and categorize incoming requests",
    backstory="You quickly assess and route requests to appropriate specialists",
)

analysis_agent = Agent(
    role="Complex Analyst",
    llm=LLM(model="gpt-4"),  # More powerful model for complex reasoning
    goal="Perform detailed analysis of complex issues",
    backstory="You excel at solving challenging problems with nuanced reasoning",
)
```

This optimization strategy:

- Matches Resources to Needs: Uses lighter models for simpler tasks

- Reduces Costs: Reserves expensive models only for complex reasoning

- Balances Performance: Optimizes for both speed and quality across the workflow
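The same idea can be applied per request instead of per agent: estimate how demanding a request is and pick a model accordingly. The sketch below uses a deliberately crude heuristic based on prompt length and a few keywords. `choose_model`, the word threshold, and `COMPLEX_KEYWORDS` are hypothetical placeholders, and the model names simply mirror the example above. You could pass the returned name to `LLM(model=...)`.

```python
# Hypothetical signals that a request needs deeper reasoning
COMPLEX_KEYWORDS = ("analyze", "compare", "trade-off", "step by step", "root cause")

def choose_model(prompt: str, light="gpt-3.5-turbo", heavy="gpt-4",
                 max_light_words=150) -> str:
    """Return the lighter model for short, simple prompts and the heavier one otherwise."""
    text = prompt.lower()
    if len(text.split()) > max_light_words:
        return heavy
    if any(keyword in text for keyword in COMPLEX_KEYWORDS):
        return heavy
    return light

print(choose_model("Categorize this support ticket: login fails"))  # gpt-3.5-turbo
print(choose_model("Analyze the causes of churn in Q3"))            # gpt-4
```

In practice you would tune the signals against real traffic, or let a cheap model do the classification itself, as the screening agent above does.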

Implement caching for repetitive queries:

```python
from crewai import Agent, Crew, Task, LLM

# Note: Crew.ai's LLM class does not send its calls through LangChain, so
# LangChain's llm_cache setting has no effect on it. This example caches at
# two levels that Crew.ai code controls directly instead.

llm = LLM(model="gpt-3.5-turbo")

research_agent = Agent(
    role="Knowledge Researcher",
    goal="Gather accurate information on technology topics",
    backstory="You're an expert at researching cutting-edge tech developments",
    llm=llm,
    verbose=True,
)

# {topic} is filled in from the inputs passed to kickoff()
tech_task = Task(
    description="What are the key features of {topic}?",
    expected_output="A concise summary of the main features",
    agent=research_agent,
)

# cache=True (also the default) makes the crew reuse results of identical tool calls
cache_demo_crew = Crew(
    agents=[research_agent],
    tasks=[tech_task],
    cache=True,
    verbose=True,
)

class CachedCrewRunner:
    """Application-level cache: identical inputs reuse the earlier crew result."""

    def __init__(self, crew):
        self.crew = crew
        self._results = {}
        self.cache_hits = 0
        self.cache_misses = 0

    def run(self, **inputs):
        key = tuple(sorted(inputs.items()))  # input values must be hashable, e.g. strings
        if key in self._results:
            self.cache_hits += 1
            print(f"Cache HIT for {inputs}")
            return self._results[key]
        self.cache_misses += 1
        print(f"Cache MISS for {inputs}")
        result = self.crew.kickoff(inputs=inputs)
        self._results[key] = result
        return result

    def get_cache_stats(self):
        total = self.cache_hits + self.cache_misses
        hit_ratio = self.cache_hits / total if total > 0 else 0
        return {
            "hits": self.cache_hits,
            "misses": self.cache_misses,
            "total": total,
            "hit_ratio": f"{hit_ratio:.2%}",
        }

runner = CachedCrewRunner(cache_demo_crew)

# Execute the workflow twice with identical inputs
print("\n=== STARTING CACHE DEMONSTRATION ===\n")
runner.run(topic="large language models")  # miss: runs the crew and calls the LLM
runner.run(topic="large language models")  # hit: returns the stored result

print("\n=== CACHE STATISTICS AFTER RUN ===\n")
print(f"Cache performance: {runner.get_cache_stats()}")
```

This caching example demonstrates:

- Tool-result caching: `Crew(cache=True)`, which is also the default, stores tool results so an agent that repeats the same tool call with the same input gets the stored result

- Application-level caching: `CachedCrewRunner` skips `kickoff()` entirely when it has already seen the same inputs

- Cache performance monitoring: The runner counts hits and misses and reports a hit ratio

- Cost optimization: The second identical request makes no LLM API calls at all

- Storage options: The in-memory dictionary suits development; to keep results across restarts, back the same lookup with a persistent store such as SQLite

- Why not LangChain’s cache: Setting `langchain.llm_cache` only affects LangChain model objects, and Crew.ai’s `LLM` class does not call through them

Caching results avoids redundant LLM calls and reduces both cost and latency, particularly in applications with repetitive queries or tasks that are rerun with the same inputs. A cached answer is returned unchanged, so cache only tasks whose correct answer does not depend on the current time or on hidden state. Clear the cache whenever prompts, tools, or models change.
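To keep cached answers across process restarts, a small SQLite table keyed by a hash of the inputs is enough for many projects. The sketch below uses only the standard library. `SQLiteResultCache` and `fake_crew` are hypothetical. The cache stores results as text, so you would cache something like the crew output's `raw` text rather than the output object itself.

```python
import hashlib
import json
import sqlite3

class SQLiteResultCache:
    """Persist text results keyed by a hash of JSON-serializable inputs."""

    def __init__(self, path=":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS results (key TEXT PRIMARY KEY, value TEXT)"
        )

    @staticmethod
    def make_key(inputs: dict) -> str:
        # sort_keys makes {"a": 1, "b": 2} and {"b": 2, "a": 1} hash identically
        payload = json.dumps(inputs, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, inputs: dict):
        row = self.conn.execute(
            "SELECT value FROM results WHERE key = ?", (self.make_key(inputs),)
        ).fetchone()
        return row[0] if row else None

    def put(self, inputs: dict, value: str) -> None:
        with self.conn:  # commits the transaction on success
            self.conn.execute(
                "INSERT OR REPLACE INTO results (key, value) VALUES (?, ?)",
                (self.make_key(inputs), value),
            )

    def get_or_compute(self, inputs: dict, compute):
        cached = self.get(inputs)
        if cached is not None:
            return cached
        value = compute(inputs)
        self.put(inputs, value)
        return value

calls = []

def fake_crew(inputs):
    """Stand-in for something like crew.kickoff(inputs=inputs).raw"""
    calls.append(inputs)
    return f"summary of {inputs['topic']}"

cache = SQLiteResultCache()  # pass a file path such as "cache.db" to persist
print(cache.get_or_compute({"topic": "LLMs"}, fake_crew))  # summary of LLMs
print(cache.get_or_compute({"topic": "LLMs"}, fake_crew))  # summary of LLMs
print(len(calls))  # 1
```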

Conclusion

Crew.ai represents a powerful paradigm shift in how we develop and deploy AI solutions. By enabling the orchestration of multiple specialized agents, it opens new possibilities for solving complex problems that were previously challenging for single-agent approaches.

As you continue to explore and implement Crew.ai in your projects, remember these key takeaways:

- Thoughtful agent design with clear roles and responsibilities is essential

- Tool integration extends agent capabilities in powerful ways

- Proper error handling and performance optimization create robust, efficient systems

- The crew structure enables complex workflows with specialized expertise at each stage

The future of AI lies in collaboration—both between humans and AI, and between multiple AI agents with complementary capabilities. Crew.ai provides a solid foundation for building these collaborative systems and pushing the boundaries of what’s possible in AI application development.