Coercing LLM Agents into Structured Responses using Pydantic AI

Introduction

In the rapidly evolving landscape of AI development, Large Language Models (LLMs) have become powerful tools for generating dynamic content and responses. However, ensuring consistency and reliability in LLM outputs remains a significant challenge. This is where Pydantic AI enters the picture, offering a robust solution for building LLM agents that generate structured, validated responses.

Pydantic AI combines Pydantic’s data validation with LLM integration. You declare the shape of the answer you want as a Pydantic model. Pydantic AI describes that shape to the LLM as a JSON schema and validates the response against the model before your code sees it. The result is typed, validated output rather than free-form text that you have to parse yourself.

The benefits of using structured responses in LLM applications include:

- Consistent data formats, enforced by a declared schema

- Automatic validation of LLM outputs

- Reduced error handling complexity

- Improved application reliability

- Enhanced integration capabilities

Technical requirements:

- Python 3.9+ (the examples use built-in generics such as list[str], which require Python 3.9)

- pydantic-ai package

- Access to an LLM provider (e.g., OpenAI, Anthropic, Google)

- Basic understanding of Python and API integration

Understanding Pydantic AI

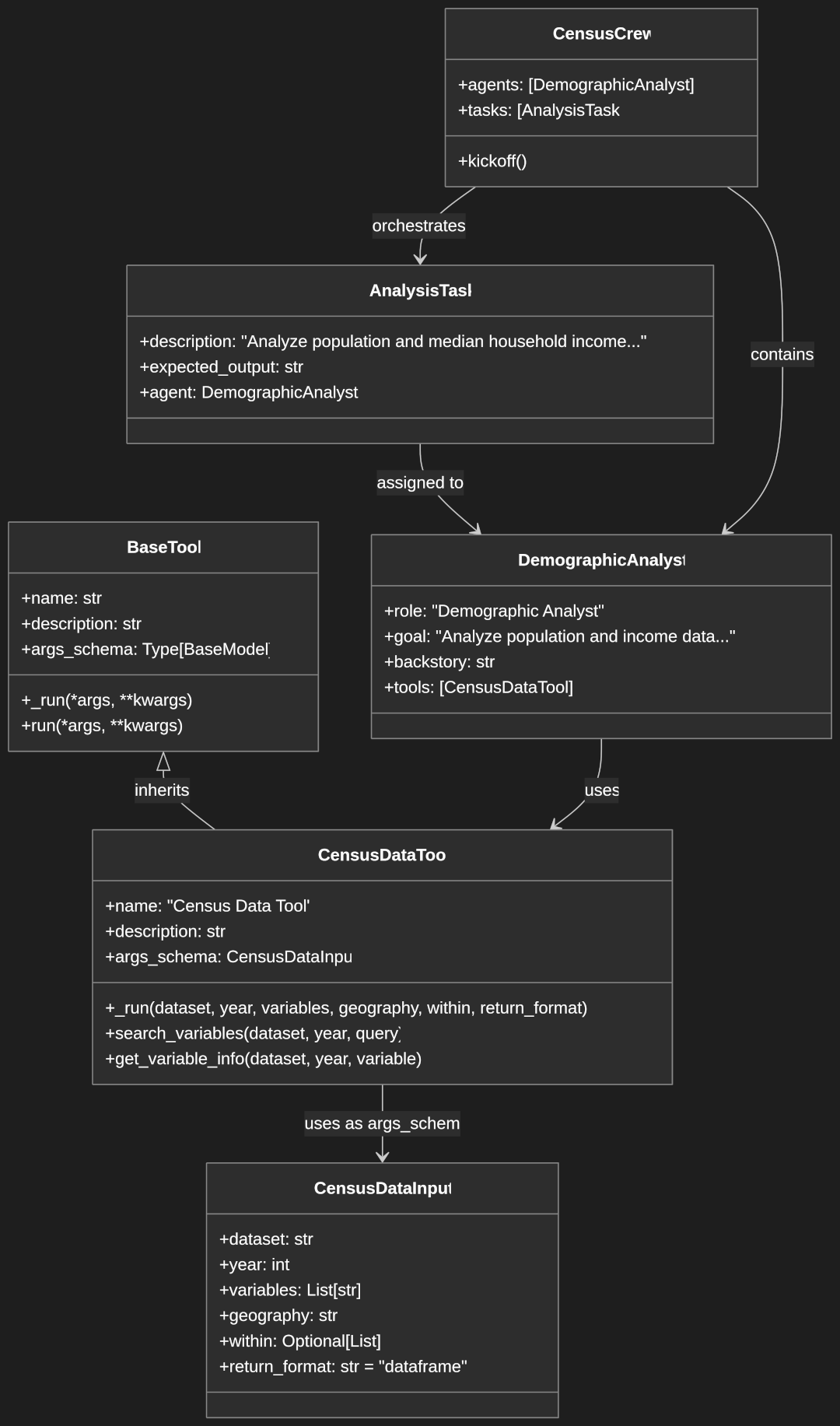

Core Concepts and Architecture

Pydantic AI builds upon Pydantic’s robust data validation framework, extending it specifically for LLM interactions. The architecture seamlessly integrates with various LLM providers while maintaining Pydantic’s core validation capabilities.

Read our guide on using Pydantic for data validation for more information on using Pydantic.

Key architectural components:

- Agent-based architecture for LLM interactions

- Integration with multiple LLM providers

- Built-in validation pipeline

- Type safety with Pydantic models

Key Features and Benefits

Pydantic AI offers several powerful features:

- Type Safety and Validation:
  - Automatic type checking
  - Field constraints enforcement
  - Custom validation rules
  - Error handling mechanisms
- Provider Integration:
  - OpenAI support
  - Anthropic support
  - Google support
  - Extensible provider system
- Structured Responses:
  - Schema-based validation
  - Documentation generation
  - API integration support
- Agent-based Interaction:
  - Simple API
  - Synchronous and asynchronous operations
  - Configurable system prompts

Getting Started

Installation and Setup

Begin by installing Pydantic AI:

```bash
pip install pydantic-ai
```

This command installs the pydantic-ai package, which provides the core functionality for structured LLM responses. The package builds on Pydantic’s validation system, extending it with LLM integration capabilities.

Configure your environment variables (depending on your provider):

```bash
export OPENAI_API_KEY="your-api-key"
# or
export ANTHROPIC_API_KEY="your-api-key"
# or
export GOOGLE_API_KEY="your-api-key"
```

Setting environment variables for API keys is a security best practice. It keeps sensitive credentials out of your code and allows for different configurations in development, testing, and production environments.
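You can fail fast with a clear message when a key is missing by checking the environment before you build an agent. The helper below is a hypothetical sketch: the `PROVIDER_KEYS` mapping and the `require_api_key` name are ours, not part of Pydantic AI. It covers only the two providers whose model strings appear in this guide.

```python
import os

# Hypothetical mapping from model-string prefix to the env var holding its key
PROVIDER_KEYS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def require_api_key(model: str) -> str:
    """Return the env var name for `model`, raising if that variable is unset."""
    provider = model.split(":", 1)[0]
    var = PROVIDER_KEYS.get(provider)
    if var is None:
        raise ValueError(f"No API key variable known for provider {provider!r}")
    if not os.environ.get(var):
        raise RuntimeError(f"{var} is not set; export it before creating the agent")
    return var
```

Call `require_api_key('openai:gpt-4')` just before `Agent('openai:gpt-4', ...)`. A misconfigured environment then fails at startup, not on the first request.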

Basic Implementation

Create your first AI agent:

```python
from pydantic_ai import Agent
from pydantic import BaseModel, Field


class SimpleResponse(BaseModel):
    content: str
    confidence: float = Field(ge=0, le=1)
    tags: list[str]


# Set up the agent with output type
agent = Agent(
    'openai:gpt-4',
    system_prompt='Provide structured responses with high accuracy.',
    output_type=SimpleResponse,
)

# Generate a response
response = agent.run_sync('Summarize the benefits of renewable energy')

print(f"Content: {response.output.content}")
print(f"Confidence: {response.output.confidence}")
print(f"Tags: {response.output.tags}")
```

In this example:

- We define a `SimpleResponse` Pydantic model that specifies the expected structure of our LLM output.
- The model includes three fields: `content` (string), `confidence` (float between 0 and 1), and `tags` (list of strings).
- We create an `Agent` instance specifying:
  - The LLM provider and model (`openai:gpt-4`)
  - A system prompt that guides the LLM’s behavior
  - The output type, using our Pydantic model
- The `run_sync` method sends our prompt to the LLM and returns a structured response.
- We access the output properties through dot notation on `response.output`.
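It helps to look at what the LLM is actually asked to produce. Pydantic AI builds its output description from the model’s JSON schema, and you can inspect that schema locally with Pydantic’s `model_json_schema()`. The library may adjust the schema for each provider, so treat this as an approximation. The snippet needs only Pydantic v2 and no API key.

```python
import json

from pydantic import BaseModel, Field


class SimpleResponse(BaseModel):
    content: str
    confidence: float = Field(ge=0, le=1)
    tags: list[str]


schema = SimpleResponse.model_json_schema()
print(json.dumps(schema, indent=2))

# Field(ge=0, le=1) becomes "minimum"/"maximum" in the schema,
# so the allowed range is stated to the LLM up front.
print(schema["properties"]["confidence"])
```

All three fields appear under `required` because none of them has a default value.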

Building Structured Responses

Model Definition

Creating custom models involves defining the structure and constraints of your expected LLM responses:

```python
from pydantic import BaseModel, Field


class ArticleGenerator(BaseModel):
    title: str = Field(max_length=100)
    content: str = Field(min_length=100)
    # Pydantic v2 uses min_length for lists too (min_items is deprecated)
    keywords: list[str] = Field(min_length=3)
    summary: str = Field(max_length=200)
```

This model definition:

- Creates an `ArticleGenerator` class that inherits from Pydantic’s `BaseModel`
- Defines four fields: `title`, `content`, `keywords`, and `summary`
- Adds validation constraints using `Field`:
  - The title cannot exceed 100 characters
  - The content must be at least 100 characters
  - The keywords list must contain at least 3 items
  - The summary cannot exceed 200 characters
- These constraints ensure the LLM output meets our specific requirements
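You can check these constraints locally, without calling an LLM, by validating a hand-written dictionary. This sketch uses plain Pydantic v2. `constraint_violations` is a hypothetical helper, and the list constraint is written as `min_length`, which is the Pydantic v2 spelling for list length limits.

```python
from pydantic import BaseModel, Field, ValidationError


class ArticleGenerator(BaseModel):
    title: str = Field(max_length=100)
    content: str = Field(min_length=100)
    keywords: list[str] = Field(min_length=3)
    summary: str = Field(max_length=200)


def constraint_violations(data: dict) -> list[str]:
    """Return the names of fields that fail validation (empty list if valid)."""
    try:
        ArticleGenerator.model_validate(data)
    except ValidationError as exc:
        return [str(err["loc"][0]) for err in exc.errors()]
    return []


too_short = {"title": "AI", "content": "Short.", "keywords": ["ai"], "summary": "x"}
print(constraint_violations(too_short))  # ['content', 'keywords']
```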

Working with Responses

Handle response generation and validation:

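```python
from pydantic_ai import Agent
from pydantic_ai.exceptions import UnexpectedModelBehavior

agent = Agent('openai:gpt-4', output_type=ArticleGenerator)

try:
    result = agent.run_sync("Write about artificial intelligence")
    article = result.output  # already validated against ArticleGenerator
    print(f"Title: {article.title}")
    print(f"Content: {article.content}")
except UnexpectedModelBehavior as e:
    # Raised when the model's output still fails validation after the agent's retries
    print(f"Validation failed: {e}")
```

This code demonstrates:

- Creating an agent configured with our `ArticleGenerator` output type
- Running the agent with a simple prompt about artificial intelligence inside a `try`/`except` block
- Accessing the structured fields (`title`, `content`) through the output object
- Handling validation failures. If the LLM’s response doesn’t meet our constraints, Pydantic AI sends the validation errors back to the model and asks it to try again, up to the agent’s retry limit (configurable with the `retries` argument to `Agent`). Only when the retries are exhausted does `run_sync` raise `UnexpectedModelBehavior`, which is why the example catches that exception instead of `pydantic.ValidationError`.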
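Pydantic AI does not give up on the first invalid output. It returns the validation errors to the model as a retry prompt and raises only once its retry budget is used up. The sketch below imitates that loop in plain Pydantic v2 so you can see the idea without an API key. It is not Pydantic AI’s internal code: `run_with_retries`, `Summary`, and the fake model are all hypothetical.

```python
from typing import Callable

from pydantic import BaseModel, Field, ValidationError


class Summary(BaseModel):
    title: str = Field(max_length=20)
    keywords: list[str] = Field(min_length=3)


def run_with_retries(
    call_model: Callable[[str], str], prompt: str, max_retries: int = 1
) -> Summary:
    """Validate the model's JSON reply, feeding errors back for up to `max_retries` retries."""
    message = prompt
    for attempt in range(max_retries + 1):
        raw = call_model(message)
        try:
            return Summary.model_validate_json(raw)
        except ValidationError as exc:
            if attempt == max_retries:
                raise RuntimeError(f"Output still invalid after {max_retries} retries") from exc
            # Send the validation errors back so the model can correct itself
            message = f"{prompt}\n\nYour last answer was invalid:\n{exc}\nPlease fix it."
    raise AssertionError("unreachable")


# A fake "LLM" that answers badly once, then correctly
replies = iter([
    '{"title": "Renewables", "keywords": ["solar"]}',
    '{"title": "Renewables", "keywords": ["solar", "wind", "hydro"]}',
])
seen_prompts: list[str] = []


def fake_model(message: str) -> str:
    seen_prompts.append(message)
    return next(replies)


summary = run_with_retries(fake_model, "Summarize renewable energy as JSON")
print(summary.keywords)   # ['solar', 'wind', 'hydro']
print(len(seen_prompts))  # 2
```

The second prompt contains the text of the first `ValidationError`. This feedback is what lets a model repair its answer rather than repeat the same mistake.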

Code Example: Basic Model Implementation

```python
from pydantic_ai import Agent
from pydantic_ai.exceptions import UnexpectedModelBehavior
from pydantic import BaseModel, Field


class ProductDescription(BaseModel):
    name: str = Field(max_length=50)
    description: str = Field(min_length=100)
    features: list[str] = Field(min_length=3)
    price_range: str
    target_audience: str


# Usage example
agent = Agent('anthropic:claude-3-5-sonnet-latest', output_type=ProductDescription)

try:
    result = agent.run_sync(
        """
        Generate a product description for wireless headphones
        targeting professional customers.
        """
    )
    product = result.output
    print(product.model_dump())
except UnexpectedModelBehavior as e:
    # The model kept producing output that failed validation
    print(f"Error: {e}")
```

This example shows:

- A more complex model with five fields, three of which carry length constraints
- Using a different LLM provider (Anthropic Claude)
- A multi-line prompt with specific instructions
- Using `model_dump()` to convert the response to a dictionary, which is useful for further processing or serialization
- A consistent error-handling pattern: catching `UnexpectedModelBehavior`, which Pydantic AI raises once its validation retries are exhausted
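Because `result.output` is an ordinary Pydantic model, Pydantic’s usual serialization methods work on it. The minimal sketch below uses a hand-built `ProductDescription` with field values invented for illustration. It shows a dictionary dump, a JSON dump, and a round trip back into a validated model.

```python
from pydantic import BaseModel, Field


class ProductDescription(BaseModel):
    name: str = Field(max_length=50)
    description: str = Field(min_length=100)
    features: list[str] = Field(min_length=3)
    price_range: str
    target_audience: str


# Illustrative values, not real product data
product = ProductDescription(
    name="Example Pro Headphones",
    description="Wireless over-ear headphones with active noise cancellation. " * 2,
    features=["Active noise cancellation", "30-hour battery", "Multipoint Bluetooth"],
    price_range="$250-$350",
    target_audience="Professionals who work remotely",
)

as_dict = product.model_dump()       # plain dict, e.g. for a database row
as_json = product.model_dump_json()  # JSON string, e.g. for an HTTP response or cache
restored = ProductDescription.model_validate_json(as_json)  # re-validated on the way in
print(restored == product)  # True
```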

Advanced Features

Custom System Prompts

Pydantic AI allows for sophisticated prompt engineering:

```python
from pydantic_ai import Agent
from pydantic import BaseModel, Field


class CustomPromptModel(BaseModel):
    response: str
    category: str
    # Enforce the 0-1 range the system prompt asks for
    confidence: float = Field(ge=0, le=1)


agent = Agent(
    'anthropic:claude-3-5-sonnet-latest',
    system_prompt="""
    You are a specialized analysis assistant.
    Always provide:
    1. A detailed response
    2. A precise category classification
    3. A confidence score between 0 and 1
    """,
    output_type=CustomPromptModel,
)

result = agent.run_sync(
    "Analyze the following text: The solar industry grew by 43% last year, "
    "with new installations reaching record highs."
)
```

This code demonstrates:

- Using a detailed system prompt to guide the LLM’s behavior

- The system prompt establishes the agent’s role and response format

- A model with three specific fields that match the instructions in the system prompt

- Using Claude for specialized analysis tasks

- The prompt is more targeted and context-specific

- The resulting output will have all three required components in a structured format
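The system prompt is not the only place to put formatting guidance. Pydantic AI generally builds the output definition it sends to the model from the model’s JSON schema, and `Field(description=...)` text is part of that schema. Per-field instructions therefore travel with the schema itself. The minimal sketch below inspects the schema locally with Pydantic v2; the description wording is our own.

```python
from pydantic import BaseModel, Field


class CustomPromptModel(BaseModel):
    response: str = Field(description="A detailed analysis of the input text")
    category: str = Field(description="A single, precise category label")
    confidence: float = Field(ge=0, le=1, description="Confidence score between 0 and 1")


props = CustomPromptModel.model_json_schema()["properties"]
for name, spec in props.items():
    print(f"{name}: {spec['description']}")
```

Keeping instructions next to the fields makes them less likely to drift out of sync with the model than a separate system prompt.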

Nested Models

Complex data structures can be represented using nested models:

```python
from pydantic_ai import Agent
from pydantic import BaseModel


class Address(BaseModel):
    street: str
    city: str
    country: str


class Author(BaseModel):
    name: str
    bio: str
    address: Address


class Book(BaseModel):
    title: str
    author: Author
    synopsis: str
    chapters: list[str]


agent = Agent('anthropic:claude-3-5-sonnet-latest', output_type=Book)
result = agent.run_sync("Generate a book about a detective in futuristic Tokyo")
book = result.output
```

This example illustrates:

- Creating nested Pydantic models for complex hierarchical data:
  - `Address` is a sub-model used within the `Author` model
  - `Author` is a sub-model used within the `Book` model
- The LLM must generate a complete structured response including all nested components
- Accessing nested data is type-safe through dot notation: `book.author.address.city`
- This approach supports complex data structures while maintaining validation
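In nested models, validation errors report the full path to the failing field, so you can see which part of a generated structure was wrong. The sketch below uses plain Pydantic v2 and an invented payload that is missing `author.address.city`. `error_paths` is a hypothetical helper.

```python
from pydantic import BaseModel, ValidationError


class Address(BaseModel):
    street: str
    city: str
    country: str


class Author(BaseModel):
    name: str
    bio: str
    address: Address


class Book(BaseModel):
    title: str
    author: Author
    synopsis: str
    chapters: list[str]


def error_paths(data: dict) -> list[tuple]:
    """Return the location of every validation error (empty list if valid)."""
    try:
        Book.model_validate(data)
    except ValidationError as exc:
        return [err["loc"] for err in exc.errors()]
    return []


payload = {
    "title": "Neon Alibi",
    "author": {
        "name": "A. Writer",
        "bio": "Writes detective fiction.",
        "address": {"street": "1 Example St", "country": "Japan"},  # "city" is missing
    },
    "synopsis": "A detective investigates a case in futuristic Tokyo.",
    "chapters": ["Chapter 1", "Chapter 2"],
}

print(error_paths(payload))  # [('author', 'address', 'city')]
```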

Code Example: Advanced Implementation

```python
from typing import Any, Dict, List

from pydantic_ai import Agent
from pydantic import BaseModel, field_validator


class ContentGenerator(BaseModel):
    title: str
    sections: List[Dict[str, str]]
    metadata: Dict[str, Any]
    references: List[str]

    @field_validator('sections')
    @classmethod
    def validate_sections(cls, v: List[Dict[str, str]]) -> List[Dict[str, str]]:
        # Reject outputs with fewer than two sections
        if len(v) < 2:
            raise ValueError("Must have at least 2 sections")
        return v


agent = Agent('openai:gpt-4-turbo', output_type=ContentGenerator)

result = agent.run_sync(
    """
    Generate structured content about AI in Healthcare
    Style: technical
    Length: medium
    Include references: yes
    """
)
content = result.output
```

This advanced example demonstrates:

- Using Python type hints with `List` and `Dict` for complex field types
- Implementing a custom validator method with the `@field_validator` decorator (Pydantic v2’s replacement for the deprecated `@validator`)
- The validator ensures the `sections` list contains at least 2 items; when it raises, Pydantic AI passes the error back to the model so it can retry
- Working with dictionaries and lists in the model structure
- Using GPT-4 Turbo for complex content generation
- A structured prompt with specific formatting instructions
- The resulting output includes a complete content structure with metadata and references
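For a simple rule like "at least two sections", a declarative `Field(min_length=2)` is an alternative to a custom validator. The practical difference is visibility. The declarative constraint appears in the JSON schema Pydantic generates (as `minItems`), so the LLM can see it before generating. A `field_validator` only runs after the output arrives. This sketch compares both approaches in plain Pydantic v2; the class names and the `first_error` helper are hypothetical.

```python
from typing import Optional

from pydantic import BaseModel, Field, ValidationError, field_validator


class WithValidator(BaseModel):
    sections: list[dict[str, str]]

    @field_validator("sections")
    @classmethod
    def validate_sections(cls, v: list[dict[str, str]]) -> list[dict[str, str]]:
        # Runs only after the data has been generated and parsed
        if len(v) < 2:
            raise ValueError("Must have at least 2 sections")
        return v


class WithConstraint(BaseModel):
    # Declarative rule: also emitted as "minItems": 2 in the JSON schema
    sections: list[dict[str, str]] = Field(min_length=2)


def first_error(model: type, data: dict) -> Optional[dict]:
    """Return the first validation error for `data`, or None if it is valid."""
    try:
        model.model_validate(data)
    except ValidationError as exc:
        return exc.errors()[0]
    return None


one_section = {"sections": [{"heading": "Intro", "body": "Overview of AI in healthcare."}]}

print(first_error(WithValidator, one_section)["type"])   # value_error
print(first_error(WithConstraint, one_section)["type"])  # too_short
print(WithConstraint.model_json_schema()["properties"]["sections"].get("minItems"))  # 2
print(WithValidator.model_json_schema()["properties"]["sections"].get("minItems"))   # None
```

Custom validators remain the right tool for rules a JSON schema cannot express, such as cross-field checks.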

Practical Use Cases

Content Generation Examples

- Article Generation:

```python
from pydantic import BaseModel
from pydantic_ai import Agent


class Article(BaseModel):
    title: str
    content: str
    summary: str
    keywords: list[str]


agent = Agent('openai:gpt-4', output_type=Article)

result = agent.run_sync(
    "Write an article about renewable energy with focus on solar power"
)
article = result.output
```

This example shows:

- A practical use case for content generation

- A simple but effective model structure for articles

- Using GPT-4 for high-quality content creation

- A focused prompt that guides the content topic and emphasis

- The output provides a complete article with title, content, summary, and keywords

- Data Transformation:

```python
from pydantic import BaseModel, field_validator
from pydantic_ai import Agent


class DataTransformer(BaseModel):
    structured_data: dict
    summary: str
    categories: list[str]

    @field_validator('structured_data')
    @classmethod
    def validate_structure(cls, v: dict) -> dict:
        # structured_data must contain all three keys
        required_keys = ['main_points', 'details', 'conclusions']
        if not all(key in v for key in required_keys):
            raise ValueError("Missing required keys in structured data")
        return v


agent = Agent('anthropic:claude-3-5-sonnet-latest', output_type=DataTransformer)

result = agent.run_sync(
    "Transform this text into structured data: The company reported a 15% increase "
    "in revenue, primarily due to new product launches and market expansion efforts in Asia."
)
transformed = result.output
```

This code demonstrates:

- Using LLMs for data transformation and extraction

- A custom validator that ensures the dictionary contains specific required keys

- Using Claude for structured data extraction tasks

- The prompt provides both instructions and the text to be transformed

- The resulting output includes structured data with required sections, a summary, and categories
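A design alternative to a free-form `dict` plus a key-checking validator is a nested model. The required keys then become required properties in the schema, so the LLM learns about them up front instead of through a failed validation. In this sketch the value type of each key (`list[str]`) is our assumption, because the original model leaves it unspecified.

```python
from pydantic import BaseModel, ValidationError


class StructuredData(BaseModel):
    # Assumed value types; adjust to whatever your downstream code expects
    main_points: list[str]
    details: list[str]
    conclusions: list[str]


class DataTransformer(BaseModel):
    structured_data: StructuredData
    summary: str
    categories: list[str]


schema = DataTransformer.model_json_schema()
print(schema["$defs"]["StructuredData"]["required"])
# ['main_points', 'details', 'conclusions']

incomplete = {
    "structured_data": {"main_points": ["Revenue up 15%"], "details": []},
    "summary": "Revenue grew on new products and expansion in Asia.",
    "categories": ["finance"],
}
try:
    DataTransformer.model_validate(incomplete)
    missing = []
except ValidationError as exc:
    missing = [err["loc"] for err in exc.errors()]
print(missing)  # [('structured_data', 'conclusions')]
```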

Troubleshooting and Maintenance

Common Issues and Solutions

- Validation Problems:
  - Check field type definitions
  - Verify constraint settings
  - Review custom validators
  - Monitor LLM response quality
- Performance Issues:
  - Implement request timeouts
  - Use connection pooling
  - Monitor resource usage
  - Optimize prompt length
- Integration Challenges:
  - Verify API credentials
  - Check network connectivity
  - Monitor rate limits
  - Implement proper error handling
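For the timeout and rate-limit items above, one library-agnostic option is to wrap each agent call in `asyncio.wait_for` and retry with exponential backoff. This sketch uses only the standard library, and `call_with_backoff` is a hypothetical helper. Depending on your version, the library or the provider client may also offer its own timeout settings. Treating only timeouts as retryable by default is an assumption. Extend `retry_on` with the rate-limit errors your provider actually raises.

```python
import asyncio
import random
from typing import Awaitable, Callable, Tuple, Type, TypeVar

T = TypeVar("T")


async def call_with_backoff(
    make_call: Callable[[], Awaitable[T]],
    timeout: float = 30.0,
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (asyncio.TimeoutError,),
) -> T:
    """Run make_call() with a per-attempt timeout, retrying with exponential backoff."""
    for attempt in range(attempts):
        try:
            # make_call is a factory, so each attempt gets a fresh coroutine
            return await asyncio.wait_for(make_call(), timeout=timeout)
        except retry_on:
            if attempt == attempts - 1:
                raise
            # Wait base_delay * 2**attempt seconds, plus random jitter
            await asyncio.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    raise AssertionError("unreachable")
```

With an agent, pass a factory such as `lambda: agent.run("...")`, since `agent.run` is the asynchronous counterpart of `run_sync`, and `await` the helper inside your own async code.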

Conclusion

Pydantic AI provides a powerful framework for building structured LLM agents that combine the flexibility of language models with the reliability of strong type checking and validation. By following the best practices and patterns outlined in this guide, developers can create robust, maintainable applications that leverage LLMs effectively.

Key takeaways:

- Use structured responses for consistent output

- Implement proper validation and error handling

- Follow performance optimization guidelines

- Maintain security best practices

Future considerations:

- Emerging LLM capabilities

- Enhanced validation features

- Improved prompt engineering

- Advanced caching strategies