Building a LangGraph.JS Agent in TypeScript with Memory

Introduction

LangGraph is a powerful extension of the LangChain ecosystem that enables developers to create sophisticated, stateful applications using language models. While the original Python-based LangGraph framework has been widely adopted, LangGraph.js brings this capability to JavaScript and TypeScript environments with some important distinctions.

LangGraph vs LangGraph.js: Key Differences

- Language Support: LangGraph is Python-focused, while LangGraph.js is designed specifically for JavaScript/TypeScript environments.

- Execution Model: LangGraph.js leverages JavaScript’s event-driven architecture and async/await patterns, whereas Python LangGraph uses asyncio.

- Integration Patterns: LangGraph.js integrates seamlessly with Node.js applications, web frameworks, and browser-based applications.

- TypeScript Benefits: LangGraph.js takes advantage of TypeScript’s strong typing system, providing better developer experience through improved code completion and compile-time error checking.

- Ecosystem Compatibility: LangGraph.js works natively with JavaScript libraries and frameworks, making it ideal for web and Node.js developers.

Built on top of LangChain and leveraging the LangChain Expression Language (LCEL), LangGraph.js provides a robust framework for implementing multi-agent systems with advanced memory capabilities in JavaScript environments.

In this tutorial, we’ll explore how to build a TypeScript LangGraph agent that effectively utilizes both short-term and long-term memory systems. This combination allows our agent to maintain immediate context while building lasting knowledge bases.

Prerequisites

- Node.js (v18 or higher)

- TypeScript knowledge

- Basic understanding of LangChain

- A running ChromaDB vector database (see “Quickly Deploy ChromaDB with Docker Compose”)

What We’ll Build

We’ll create an agent that can:

- Maintain conversation context using short-term memory

- Store and retrieve long-term knowledge using vector storage

- Seamlessly integrate both memory types for intelligent responses

Setting Up the Development Environment

First, let’s set up our development environment with the necessary dependencies.

# Create project directory

mkdir langgraph-agent-memory

cd langgraph-agent-memory

# Initialize package.json

npm init -y

# Install LangChain core dependencies

npm install langchain @langchain/openai @langchain/core

# Install LangGraph

npm install @langchain/langgraph

# Install community extensions and Chroma vector store client

npm install @langchain/community chromadb

# Install TypeScript and Node types

npm install typescript @types/node --save-dev

# Install environment variable management

npm install dotenv

Don’t forget to create a .env file in your project root to store your OpenAI API key and the ChromaDB URL:

OPENAI_API_KEY=your-api-key-here

CHROMADB_URL=http://localhost:8000/
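
Since both values are only read at runtime, it can help to validate them once at startup. The following is a minimal sketch of that idea; `loadConfig` and `AgentConfig` are hypothetical names introduced here (they are not part of LangChain or dotenv), and the default URL simply mirrors the fallback used later in this tutorial.

```typescript
// Hypothetical helper: validates the environment variables this tutorial relies on.
interface AgentConfig {
  openAIApiKey: string;
  chromaUrl: string;
}

function loadConfig(env: Record<string, string | undefined>): AgentConfig {
  const openAIApiKey = env.OPENAI_API_KEY?.trim();
  if (!openAIApiKey) {
    throw new Error("OPENAI_API_KEY is not set; add it to your .env file");
  }
  // Fall back to the local default and drop any trailing slashes
  const rawUrl = env.CHROMADB_URL?.trim() || "http://localhost:8000";
  const chromaUrl = rawUrl.replace(/\/+$/, "");
  return { openAIApiKey, chromaUrl };
}
```

In the project itself you would presumably call `loadConfig(process.env)` once, after dotenv has loaded the .env file, and pass the result to the code that creates the memories.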

Create a basic TypeScript configuration file at tsconfig.json:

{

"compilerOptions": {

"target": "ES2020",

"module": "commonjs",

"strict": true,

"esModuleInterop": true,

"skipLibCheck": true,

"forceConsistentCasingInFileNames": true,

"outDir": "./dist",

"rootDir": "./src"

},

"include": ["src/**/*"]

}

Project structure we’ll be creating:

/langgraph-agent-memory

├── src/

│ ├── agent.ts # Base agent implementation

│ ├── index.ts # Practical example implementation

│ ├── run.ts # Script to run the conversational agent

│ ├── memory/

│ │ ├── shortTerm.ts # Short-term memory implementation

│ │ └── longTerm.ts # Long-term memory implementation

│ ├── services/

│ │ └── conversationalAgent.ts # Conversational agent service

│ └── utils/

│ ├── memoryManager.ts # Memory management utilities

│ ├── memoryFlow.ts # Memory flow handling

│ ├── processingNode.ts # Processing logic implementation

│ └── memoryErrorHandler.ts # Error handling and debugging

├── tsconfig.json # TypeScript configuration

└── package.json # Project dependencies and scripts

Understanding Memory Types

Short-term Memory Overview

In this tutorial, short-term memory is implemented with LangChain’s BufferMemory, which holds the recent conversation in process memory. This is what keeps the agent coherent from one turn to the next.

Create a file for short-term memory implementation:

// filepath: src/memory/shortTerm.ts

import { BufferMemory } from "langchain/memory";

export function createShortTermMemory() {

const shortTermMemory = new BufferMemory({

returnMessages: true,

memoryKey: "chat_history",

inputKey: "input"

});

return shortTermMemory;

}

export class ShortTermOperations {

async storeContext(memory: BufferMemory, input: string, response: string) {

await memory.saveContext({

input: input,

}, {

output: response

});

}

async retrieveRecentHistory(memory: BufferMemory) {

const history = await memory.loadMemoryVariables({});

return history.chat_history;

}

async clearOldContexts(memory: BufferMemory) {

await memory.clear();

}

}

Understanding the Short-term Memory Implementation

The short-term memory system serves as the agent’s immediate conversational context with two main parts:

- createShortTermMemory function:

- Creates a BufferMemory instance configured to store and retrieve conversation history

- Sets returnMessages: true so the history is returned as a structured message array

- Uses memoryKey: "chat_history" to set the key under which conversations are stored

- Uses inputKey: "input" to specify which input field is saved from user queries

- ShortTermOperations class:

- Provides utility methods for managing the memory lifecycle

- storeContext: Saves a new exchange (user input and agent response)

- retrieveRecentHistory: Retrieves all stored conversation messages

- clearOldContexts: Clears the stored conversation when needed

This implementation serves as the “working memory” of our agent, keeping track of recent interactions to maintain conversation continuity.
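
To make the buffer idea concrete without any dependencies, here is a simplified stand-in. It is not LangChain’s BufferMemory; `SimpleBuffer`, `ChatMessage`, and `saveTurn` are hypothetical names that only mimic the save-a-turn, read-the-history, clear cycle described above.

```typescript
// Simplified stand-in for a conversation buffer; NOT LangChain's BufferMemory.
type Role = "human" | "ai";

interface ChatMessage {
  role: Role;
  content: string;
}

class SimpleBuffer {
  private messages: ChatMessage[] = [];

  // Mirrors the idea of saveContext: one user input plus one agent output per turn
  saveTurn(input: string, output: string): void {
    this.messages.push({ role: "human", content: input });
    this.messages.push({ role: "ai", content: output });
  }

  // Returns copies so callers cannot mutate the buffer by accident
  history(): ChatMessage[] {
    return this.messages.map((m) => ({ ...m }));
  }

  clear(): void {
    this.messages = [];
  }
}

const demoBuffer = new SimpleBuffer();
demoBuffer.saveTurn("My name is Ada.", "Nice to meet you, Ada!");
demoBuffer.saveTurn("What is my name?", "Your name is Ada.");
console.log(demoBuffer.history().length); // 4 (two turns, two messages each)
```

Because everything lives in a plain array, this kind of memory disappears when the process exits, which is the gap the long-term memory is meant to fill.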

Long-term Memory Overview

Long-term memory utilizes vector stores to persist information across sessions. This allows the agent to build and maintain a knowledge base over time.

Create a file for long-term memory implementation:

// filepath: src/memory/longTerm.ts

import { BaseRetriever } from "@langchain/core/retrievers";

import { Chroma } from "@langchain/community/vectorstores/chroma";

import { OpenAIEmbeddings } from "@langchain/openai";

import { Document } from "@langchain/core/documents";

import { ChromaClient } from "chromadb";

export async function createLongTermMemory(collectionName = "agent_knowledge") {

// Create a vector store

const embeddings = new OpenAIEmbeddings();

try {

// Try to use existing collection

const vectorStore = await Chroma.fromExistingCollection(

embeddings,

{

collectionName,

url: process.env.CHROMADB_URL || "http://localhost:8000",

}

);

const longTermMemory = vectorStore.asRetriever();

return { longTermMemory, vectorStore };

} catch (error) {

// Create new collection if not found

try {

const vectorStore = await Chroma.fromTexts(

["Initial knowledge repository"],

[{ source: "setup" }],

embeddings,

{

collectionName,

url: process.env.CHROMADB_URL || "http://localhost:8000",

}

);

const longTermMemory = vectorStore.asRetriever();

return { longTermMemory, vectorStore };

} catch (innerError) {

console.error("Failed to create ChromaDB collection:", innerError);

throw new Error("Failed to initialize ChromaDB for long-term memory");

}

}

}

export async function initializeEmptyLongTermMemory(collectionName = "agent_knowledge") {

const embeddings = new OpenAIEmbeddings();

const vectorStore = await Chroma.fromTexts(

["Initial knowledge repository"],

[{ source: "setup" }],

embeddings,

{

collectionName,

url: process.env.CHROMADB_URL || "http://localhost:8000",

}

);

return vectorStore.asRetriever();

}

export class LongTermOperations {

private vectorStore: Chroma;

private embeddings: OpenAIEmbeddings;

constructor(vectorStore: Chroma, embeddings: OpenAIEmbeddings) {

this.vectorStore = vectorStore;

this.embeddings = embeddings;

}

async storeInformation(data: string, metadata: Record<string, any> = {}) {

const doc = new Document({

pageContent: data,

metadata

});

await this.vectorStore.addDocuments([doc]);

}

async queryKnowledge(query: string, k: number = 5) {

const results = await this.vectorStore.similaritySearch(query, k);

return results;

}

}

Understanding the Long-term Memory Implementation

The long-term memory system leverages ChromaDB as a vector database to store and retrieve knowledge persistently:

- createLongTermMemory function:

- Attempts to connect to an existing ChromaDB collection first

- Falls back to creating a new collection if none exists

- Uses OpenAI embeddings to convert text into vector representations

- If you wish to create embeddings locally, read our guide on Creating Local Vector Embeddings with Transformers.js

- Returns both the retriever (for querying) and the vectorStore (for management)

- initializeEmptyLongTermMemory function:

- Creates a fresh ChromaDB collection with a single initial document

- Useful for resetting the memory or starting from scratch

- LongTermOperations class:

- storeInformation: Adds new knowledge to the vector database with metadata

- queryKnowledge: Retrieves the most semantically similar documents for a given query

This implementation provides persistent knowledge storage that survives between sessions and can be semantically searched, unlike the short-term memory which only maintains recent conversation flow.
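
The idea behind semantic search can be illustrated with a toy example. The sketch below is only an illustration: `toyEmbed` is a hypothetical stand-in that counts words from a tiny fixed vocabulary (real embeddings come from a model such as OpenAI’s), and `toySimilaritySearch` ranks documents by cosine similarity in memory rather than querying ChromaDB.

```typescript
// Toy illustration of similarity search; the embedding is a hypothetical
// stand-in (word counts over a tiny vocabulary), not OpenAI embeddings.
interface KnowledgeDoc {
  pageContent: string;
  metadata: Record<string, unknown>;
}

const VOCABULARY = ["typescript", "memory", "vector", "coffee", "agent"];

function toyEmbed(text: string): number[] {
  const words = text.toLowerCase().split(/\W+/);
  return VOCABULARY.map((term) => words.filter((w) => w === term).length);
}

function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // Treat an all-zero vector as dissimilar to everything
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

function toySimilaritySearch(docs: KnowledgeDoc[], query: string, k: number): KnowledgeDoc[] {
  const queryVector = toyEmbed(query);
  return docs
    .map((doc) => ({ doc, score: cosineSimilarity(queryVector, toyEmbed(doc.pageContent)) }))
    .sort((x, y) => y.score - x.score) // highest similarity first
    .slice(0, k)
    .map((entry) => entry.doc);
}
```

A vector database performs the same ranking step, but over stored model embeddings and with indexing so it scales beyond a handful of documents.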

Memory Interaction Patterns

The two memory systems work together through a coordinated approach. Let’s create a memory manager to handle this interaction:

// filepath: src/utils/memoryManager.ts

import { BufferMemory } from "langchain/memory";

import { Chroma } from "@langchain/community/vectorstores/chroma";

import { OpenAIEmbeddings } from "@langchain/openai";

import { BaseRetriever } from "@langchain/core/retrievers";

import { ShortTermOperations } from '../memory/shortTerm';

import { LongTermOperations } from '../memory/longTerm';

export class MemoryManager {

static async createMemories(collectionName = "agent_knowledge") {

// Create short-term memory

const shortTerm = new BufferMemory({

memoryKey: "chat_history",

returnMessages: true,

inputKey: "input"

});

try {

// Create long-term memory with vector store

const embeddings = new OpenAIEmbeddings();

try {

// Try to connect to existing collection

const vectorStore = await Chroma.fromExistingCollection(

embeddings,

{

collectionName,

url: process.env.CHROMADB_URL || "http://localhost:8000",

}

);

const longTerm = vectorStore.asRetriever();

return { shortTerm, longTerm, vectorStore };

} catch (error) {

// Create new collection if not found

const vectorStore = await Chroma.fromTexts(

["Initial knowledge repository"],

[{ source: "setup" }],

embeddings,

{

collectionName,

url: process.env.CHROMADB_URL || "http://localhost:8000",

}

);

const longTerm = vectorStore.asRetriever();

return { shortTerm, longTerm, vectorStore };

}

} catch (error) {

console.warn("Failed to initialize ChromaDB for long-term memory, falling back to short-term only mode");

console.error(error);

// Return null for longTerm and vectorStore in case of failure

return { shortTerm, longTerm: null, vectorStore: null };

}

}

static async persistMemory(vectorStore: Chroma | null) {

if (!vectorStore) {

console.warn("No vector store available, skipping memory persistence");

return;

}

try {

// Ensure the vector store is persisted

await vectorStore.ensureCollection();

console.log("Memory persisted successfully");

} catch (error) {

console.error("Failed to persist memory:", error);

}

}

static async optimizeMemory(shortTermMemory: BufferMemory) {

// Example of pruning old conversations when they exceed a threshold

const memoryVariables = await shortTermMemory.loadMemoryVariables({});

if (memoryVariables.chat_history.length > 20) {

// Keep only the last 10 messages

const recent = memoryVariables.chat_history.slice(-10);

await shortTermMemory.clear();

// Re-add the recent messages

for (let i = 0; i < recent.length; i += 2) {

const input = recent[i].content;

const output = recent[i + 1]?.content || "";

await shortTermMemory.saveContext({ input }, { output });

}

}

}

}

export class CombinedMemoryManager {

private shortTerm: ShortTermOperations;

private longTerm: LongTermOperations;

private shortTermMemory: BufferMemory;

private longTermRetriever: BaseRetriever;

constructor(shortTermMemory: BufferMemory, longTermRetriever: BaseRetriever) {

this.shortTermMemory = shortTermMemory;

this.longTermRetriever = longTermRetriever;

this.shortTerm = new ShortTermOperations();

this.longTerm = new LongTermOperations(

(longTermRetriever as any).vectorStore as Chroma,

new OpenAIEmbeddings()

);

}

async processInput(input: string) {

// Get recent context

const shortTermContext = await this.shortTerm.retrieveRecentHistory(this.shortTermMemory);

// Get relevant long-term knowledge

const longTermContext = await this.longTermRetriever.getRelevantDocuments(input);

return this.mergeContexts(shortTermContext, longTermContext);

}

private mergeContexts(shortTerm: any, longTerm: any) {

// Implement priority-based context merging

return {

immediate: shortTerm,

background: longTerm

};

}

}

Understanding the Memory Manager Implementation

The memory management system coordinates both memory types and provides utility functions:

- MemoryManager class (setup and maintenance):

- createMemories: Initializes both memory systems and falls back to short-term-only mode if ChromaDB is unavailable

- persistMemory: Checks that the ChromaDB collection exists; documents themselves are sent to ChromaDB when they are added

- optimizeMemory: Prevents short-term memory overflow by pruning older messages

- CombinedMemoryManager class (runtime memory operations):

- processInput: Retrieves and combines context from both memory systems

- mergeContexts: Returns the immediate conversation context and the background knowledge as a single object

This two-tiered approach separates system-level memory operations (initialization, persistence) from runtime memory management (context retrieval, merging), creating a clean architecture that’s easier to maintain and extend.
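
The pruning step in optimizeMemory can be looked at in isolation. The sketch below works on a simplified, hypothetical message shape (`PrunableMessage`) rather than LangChain message objects, and shows one way to keep the most recent messages while making sure the kept window still starts with a human message.

```typescript
// Sketch of pair-aware pruning on a simplified, hypothetical message shape.
interface PrunableMessage {
  role: "human" | "ai";
  content: string;
}

function pruneHistory(
  messages: PrunableMessage[],
  maxMessages = 20,
  keepMessages = 10
): PrunableMessage[] {
  if (messages.length <= maxMessages) {
    return messages; // nothing to prune yet
  }
  if (keepMessages <= 0) {
    return []; // slice(-0) would return everything, so handle this explicitly
  }
  let recent = messages.slice(-keepMessages);
  // Drop a leading AI message so the window starts with a human turn
  if (recent.length > 0 && recent[0].role === "ai") {
    recent = recent.slice(1);
  }
  return recent;
}
```

Working on a plain array first and writing the result back to memory afterwards keeps the pruning rule easy to unit test.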

Building the Base Agent

Core Agent Structure

Let’s implement our base agent class with LangGraph.js:

// filepath: src/agent.ts

import { StateGraph, END } from "@langchain/langgraph";

import { ChatOpenAI } from "@langchain/openai";

import { BufferMemory } from "langchain/memory";

import { BaseRetriever } from "@langchain/core/retrievers";

import { Chroma } from "@langchain/community/vectorstores/chroma";

// Define the state type

type AgentState = {

input: string;

chat_history: any[];

long_term_knowledge: any[];

response?: string;

};

export class TypeScriptAgent {

private shortTermMemory: BufferMemory;

private longTermMemory: BaseRetriever | null;

private llm: ChatOpenAI;

private vectorStore: Chroma | null;

public graph: any; // Using 'any' to avoid TypeScript errors

constructor(config: {

shortTermMemory: BufferMemory,

longTermMemory?: BaseRetriever | null,

llm?: ChatOpenAI,

vectorStore?: Chroma | null

}) {

this.shortTermMemory = config.shortTermMemory;

this.longTermMemory = config.longTermMemory || null;

this.llm = config.llm || new ChatOpenAI({ modelName: "gpt-4" });

// Normalize undefined to null so the field matches its declared type

this.vectorStore = config.vectorStore ?? null;

// Setup the graph

this.setupGraph();

}

private setupGraph(): void {

console.log("Setting up LangGraph state graph...");

// Create the state graph

const builder = new StateGraph<AgentState>({

channels: {

// value: null means the channel simply keeps the last value written to it

input: { value: null },

chat_history: { value: null },

long_term_knowledge: { value: null },

response: { value: null }

}

});

// Add nodes. Each node is an async function that returns a partial state update.

builder.addNode("retrieve_memory", async (state: AgentState) => {

try {

console.log("Retrieving memories for input:", state.input);

// Check if we already have chat history in the state

let chatHistory = state.chat_history || [];

// If no chat history in state, try to load from memory

if (!chatHistory || chatHistory.length === 0) {

console.log("No chat history in state, loading from memory");

const memoryVars = await this.shortTermMemory.loadMemoryVariables({});

chatHistory = memoryVars.chat_history || [];

}

console.log("Chat history for context:",

Array.isArray(chatHistory) ?

`${chatHistory.length} messages` :

"No messages");

// Get long-term memory if available

let longTermResults = state.long_term_knowledge || [];

// If there is no long-term knowledge in the state yet, query the retriever

if ((!longTermResults || longTermResults.length === 0) && this.longTermMemory) {

try {

console.log("Retrieving additional long-term memory...");

const newResults = await this.longTermMemory.getRelevantDocuments(state.input);

longTermResults = [...longTermResults, ...newResults];

console.log(`Retrieved ${longTermResults.length} total documents from long-term memory`);

} catch (e) {

console.warn("Error retrieving from long-term memory:", e);

}

} else if (!this.longTermMemory) {

console.log("No long-term memory available, skipping retrieval");

}

return {

chat_history: chatHistory,

long_term_knowledge: longTermResults

};

} catch (error) {

console.error("Error in retrieve_memory node:", error);

return {

chat_history: state.chat_history || [],

long_term_knowledge: state.long_term_knowledge || []

};

}

});

// Store the last generated response for direct access

let lastGeneratedResponse: string = "";

// The node is an async function that returns a partial state update

builder.addNode("generate_response", async (state: AgentState) => {

try {

console.log("Generating response...");

// Format the context with improved handling of different message formats

const formatContext = (history: any[], knowledge: any[]) => {

let context = "Chat history:\n";

if (Array.isArray(history)) {

history.forEach((msg, index) => {

// Handle different message formats

if (msg.type && msg.content) {

// Standard LangChain message format

context += `${msg.type}: ${msg.content}\n`;

} else if (msg.human && msg.ai) {

// Some memory formats store as {human, ai} pairs

context += `Human: ${msg.human}\nAI: ${msg.ai}\n`;

} else if (msg.input && msg.output) {

// Some memory formats store as {input, output} pairs

context += `Human: ${msg.input}\nAI: ${msg.output}\n`;

} else if (typeof msg === "string") {

// Simple string format

// Alternate between human/ai roles for simple strings

const role = index % 2 === 0 ? "Human" : "AI";

context += `${role}: ${msg}\n`;

} else if (msg.role && msg.content) {

// OpenAI message format

const role = msg.role === "user" ? "Human" :

msg.role === "assistant" ? "AI" :

msg.role.charAt(0).toUpperCase() + msg.role.slice(1);

context += `${role}: ${msg.content}\n`;

} else {

// Try to extract any text we can find

const msgStr = JSON.stringify(msg);

if (msgStr && msgStr.length > 2) { // Not just "{}"

context += `Message: ${msgStr}\n`;

}

}

});

}

context += "\nLong-term knowledge:\n";

if (Array.isArray(knowledge)) {

knowledge.forEach(doc => {

if (doc.pageContent) {

context += `${doc.pageContent}\n`;

} else if (typeof doc === "string") {

context += `${doc}\n`;

} else if (doc.text) {

context += `${doc.text}\n`;

} else {

// Try to extract any text we can find

const docStr = JSON.stringify(doc);

if (docStr && docStr.length > 2) { // Not just "{}"

context += `${docStr}\n`;

}

}

});

}

return context;

};

const context = formatContext(state.chat_history, state.long_term_knowledge);

console.log("Invoking LLM with context and user input...");

// Generate response with context

const response = await this.llm.invoke(

`Context:\n${context}\n\nUser question: ${state.input}\n\nRespond to the user:`

);

// Extract the response text and store it for direct access

const responseText = response.content || response.text || "";

lastGeneratedResponse = responseText.toString();

console.log("Generated response");

// Store the response in the class instance for direct access

(this as any)._lastResponse = lastGeneratedResponse;

return {

response: lastGeneratedResponse || "I couldn't generate a response."

};

} catch (error) {

console.error("Error in generate_response node:", error);

const errorResponse = "I encountered an error while generating a response.";

lastGeneratedResponse = errorResponse;

(this as any)._lastResponse = errorResponse;

return {

response: errorResponse

};

}

});

// The node is an async function that returns a partial state update

builder.addNode("update_memory", async (state: AgentState) => {

try {

console.log("Updating memory with new conversation...");

// Get the response from the state or from the last generated response

let responseToSave = state.response;

// If no response in state, try to get it from the class instance

if (!responseToSave && (this as any)._lastResponse) {

console.log("Using directly stored LLM response for memory update");

responseToSave = (this as any)._lastResponse;

}

if (responseToSave) {

console.log("Saving to memory");

// Update short-term memory

await this.shortTermMemory.saveContext(

{ input: state.input },

{ output: responseToSave }

);

console.log("Updated short-term memory");

// Update long-term memory if needed

if (this.vectorStore && responseToSave.length > 50) {

try {

await this.vectorStore.addDocuments([

{

pageContent: `Q: ${state.input}\nA: ${responseToSave}`,

metadata: { source: "conversation" }

}

]);

console.log("Updated long-term memory");

} catch (e) {

console.warn("Failed to update long-term memory:", e);

}

} else {

console.log("Skipping long-term memory update (no vector store or response too short)");

}

// Return the response in the state to ensure it's passed along

return { response: responseToSave };

} else {

console.warn("No response to save to memory");

return {};

}

} catch (error) {

console.error("Error in update_memory node:", error);

return {};

}

});

// Define the flow

builder.addEdge("retrieve_memory", "generate_response");

builder.addEdge("generate_response", "update_memory");

builder.addEdge("update_memory", END);

// Set the entry point

builder.setEntryPoint("retrieve_memory");

// Compile the graph

this.graph = builder.compile();

}

// Add a custom invoke method to handle running the graph

async invoke(input: string): Promise<any> {

console.log("TypeScriptAgent.invoke called with input:", input);

// Reset the last response

(this as any)._lastResponse = null;

// Load existing memory before creating the initial state

console.log("Loading existing memory for initial state");

const existingMemory = await this.shortTermMemory.loadMemoryVariables({});

console.log("Existing memory loaded");

// Get long-term memory if available

let longTermResults = [];

if (this.longTermMemory) {

try {

console.log("Pre-loading long-term memory for initial state...");

longTermResults = await this.longTermMemory.getRelevantDocuments(input);

console.log(`Pre-loaded ${longTermResults.length} documents from long-term memory`);

} catch (e) {

console.warn("Error pre-loading from long-term memory:", e);

}

}

// Create initial state with existing memory

const initialState = {

input: input,

chat_history: existingMemory.chat_history || [],

long_term_knowledge: longTermResults

};

try {

console.log("Attempting to invoke graph with initial state");

// Try using the 'invoke' method

const result = await this.graph.invoke(initialState);

console.log("Graph invoke successful");

// First check if we have a direct response stored from the LLM

if ((this as any)._lastResponse) {

console.log("Using directly stored LLM response");

result.response = (this as any)._lastResponse;

}

// If no direct response, check if response is in the generate_response node

else if (!result.response && result.generate_response && result.generate_response.response) {

console.log("Extracting response from generate_response node");

result.response = result.generate_response.response;

}

// If still no response, check if we have a final state with response

else if (!result.response && this.graph.getStateHistory) {

console.log("Attempting to extract response from state history");

const stateHistory = this.graph.getStateHistory();

if (stateHistory && stateHistory.length > 0) {

const finalState = stateHistory[stateHistory.length - 1];

if (finalState && finalState.response) {

result.response = finalState.response;

}

}

}

// If still no response, try to extract one from the result

if (!result.response) {

console.warn("No response found in result");

// Try to find a response in any string property

const possibleResponseKeys = Object.keys(result).filter(key =>

typeof result[key] === 'string' &&

result[key].length > 0 &&

key !== 'input'

);

if (possibleResponseKeys.length > 0) {

result.response = result[possibleResponseKeys[0]];

} else {

result.response = "I couldn't generate a response.";

}

}

return result;

} catch (e) {

console.warn("Graph invoke failed, trying run method instead:", e);

try {

// Fallback to the 'run' method

const result = await this.graph.run(initialState);

console.log("Graph run successful");

// First check if we have a direct response stored from the LLM

if ((this as any)._lastResponse) {

console.log("Using directly stored LLM response (run method)");

result.response = (this as any)._lastResponse;

}

// If no direct response, check other sources

else if (!result.response && result.generate_response && result.generate_response.response) {

result.response = result.generate_response.response;

}

// If still no response, try to extract one

if (!result.response) {

// Try to find a response in any string property

const possibleResponseKeys = Object.keys(result).filter(key =>

typeof result[key] === 'string' &&

result[key].length > 0 &&

key !== 'input'

);

if (possibleResponseKeys.length > 0) {

result.response = result[possibleResponseKeys[0]];

} else {

result.response = "I couldn't generate a response.";

}

}

return result;

} catch (e2) {

// Final fallback - create a simple response

console.error("Graph execution failed completely:", e2);

// If we have a direct response from the LLM, use it even if the graph failed

if ((this as any)._lastResponse) {

return { response: (this as any)._lastResponse };

}

return { response: "I'm having trouble with my memory systems. Please try again." };

}

}

}

async getShortTermState() {

return await this.shortTermMemory.loadMemoryVariables({});

}

async getLongTermStats() {

if (!this.vectorStore) return { documentCount: 0 };

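// The collection handle is created lazily, so request it explicitly before counting

const collection = await this.vectorStore.ensureCollection();

const count = await collection.count();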

return { documentCount: count };

}

}

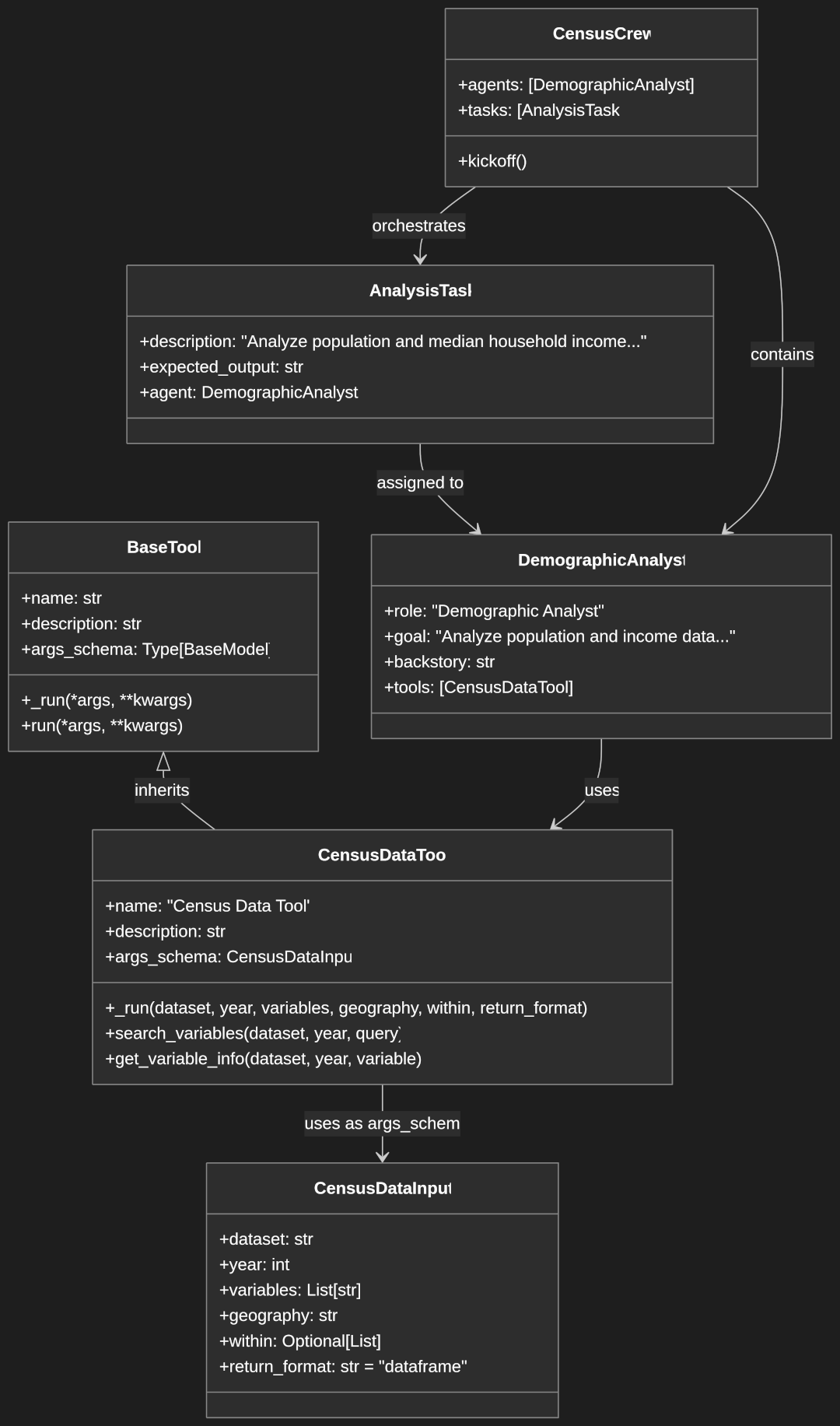

Understanding the LangGraph Node Structure

The TypeScriptAgent uses LangGraph to create a directed graph with three core nodes:

- retrieve_memory node:

- Loads conversation history from short-term memory

- Retrieves relevant documents from long-term memory

- Handles error cases gracefully by providing empty arrays if retrieval fails

- Prepares both memory types for use in generating a response

- generate_response node:

- Formats all collected context (chat history and long-term knowledge)

- Handles various message formats that might be present in memory

- Prompts the LLM with both the context and the user’s input

- Stores the generated response for direct access in case the graph execution has issues

- update_memory node:

- Updates short-term memory with the new conversation turn

- Conditionally stores important information in long-term memory

- Implements safeguards to handle missing responses

- Returns the response to ensure it persists through the graph execution

The graph edges flow from retrieve_memory → generate_response → update_memory → END, creating a linear processing flow for each user input.
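
The linear flow can be pictured as a pipeline in which every node returns only the part of the state it changed. The sketch below is plain TypeScript and deliberately does not use the LangGraph API; `FlowState`, `FlowNode`, `runFlow`, and the three stand-in nodes are hypothetical, and they are synchronous for brevity whereas the real nodes are async.

```typescript
// Plain-TypeScript sketch of the linear flow; it mimics nodes returning
// partial state updates and does not use the LangGraph API.
interface FlowState {
  input: string;
  chatHistory: string[];
  knowledge: string[];
  response?: string;
}

type FlowNode = (state: FlowState) => Partial<FlowState>;

// Hypothetical stand-ins for the three nodes described above
const retrieveMemory: FlowNode = () => ({
  knowledge: ["The user prefers TypeScript."],
});

const generateResponse: FlowNode = (state) => ({
  response: `You asked "${state.input}". Known facts: ${state.knowledge.join(" ")}`,
});

const updateMemory: FlowNode = (state) => ({
  chatHistory: [...state.chatHistory, `Human: ${state.input}`, `AI: ${state.response ?? ""}`],
});

function runFlow(nodes: FlowNode[], initial: FlowState): FlowState {
  // Each node sees the merged result of every node that ran before it
  return nodes.reduce((state, node) => ({ ...state, ...node(state) }), initial);
}

const finalState = runFlow([retrieveMemory, generateResponse, updateMemory], {
  input: "Which language should I use?",
  chatHistory: [],
  knowledge: [],
});
console.log(finalState.response);
```

The important property is that a later node can rely on what an earlier node wrote, which is why update_memory can read the response produced by generate_response.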

Processing Logic Implementation

Create a file for implementing the processing logic:

// filepath: src/utils/processingNode.ts

import { OpenAI } from "@langchain/openai";

import { CombinedMemoryManager } from "./memoryManager";

export class ProcessingNode {

private memoryManager: CombinedMemoryManager;

private llm: OpenAI;

constructor(memoryManager: CombinedMemoryManager, llm: OpenAI) {

this.memoryManager = memoryManager;

this.llm = llm;

}

async process(input: string) {

// Combine memory contexts

const combinedContext = await this.memoryManager.processInput(input);

// Format context for LLM

const formattedContext = this.formatContextForLLM(

combinedContext.immediate,

combinedContext.background

);

// Generate response using LLM

const response = await this.llm.invoke(

`Context information: ${formattedContext}\n\nUser input: ${input}\n\nProvide a helpful response:`

);

// The completion-style OpenAI class returns a plain string from invoke, so there is no .content to read

return response;

}

private formatContextForLLM(shortTerm: any, longTerm: any[]): string {

let context = "Recent conversation:\n";

if (Array.isArray(shortTerm)) {

shortTerm.forEach(item => {

context += `${item.type}: ${item.content}\n`;

});

}

context += "\nRelevant knowledge:\n";

longTerm.forEach(doc => {

context += `- ${doc.pageContent}\n`;

});

return context;

}

}

Understanding the Processing Node

The ProcessingNode class serves as an alternative approach to handling user queries without using LangGraph’s full state graph system:

- Initialization:

- Takes a CombinedMemoryManager for memory access and an LLM for response generation

- Creates a clean separation of concerns between memory retrieval and content generation

- Processing Flow:

- process method: The main entry point that orchestrates the response generation

- First retrieves context from both memory systems via the CombinedMemoryManager

- Then formats the context into a structured prompt for the LLM

- Finally, generates and returns a coherent response

- Context Formatting:

- formatContextForLLM handles converting complex memory structures into text

- Creates a clear distinction between conversation history and knowledge base content

This component could be used as a simpler alternative to the full LangGraph implementation or for testing/comparison purposes.
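
One practical concern when formatting context is prompt size. As a hedged illustration, the sketch below builds the same two-section text but stops adding knowledge entries once a character budget is reached. `buildContext`, `ContextMessage`, `ContextDoc`, and the `maxChars` default are assumptions made for this example; the tutorial’s formatContextForLLM has no such limit.

```typescript
// Hypothetical shapes; the real code receives LangChain messages and documents.
interface ContextMessage {
  role: string;
  content: string;
}

interface ContextDoc {
  pageContent: string;
}

function buildContext(
  recent: ContextMessage[],
  docs: ContextDoc[],
  maxChars = 2000
): string {
  const lines: string[] = ["Recent conversation:"];
  for (const message of recent) {
    lines.push(`${message.role}: ${message.content}`);
  }
  lines.push("", "Relevant knowledge:");
  let used = lines.join("\n").length;
  for (const doc of docs) {
    const line = `- ${doc.pageContent}`;
    // Conversation lines are always kept; knowledge is added only while it fits
    if (used + line.length + 1 > maxChars) {
      break;
    }
    lines.push(line);
    used += line.length + 1; // +1 for the joining newline
  }
  return lines.join("\n");
}
```

A character budget is a rough proxy; a token-based limit would be closer to what the model actually enforces.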

Memory Flow Management

Create a file for managing memory flow:

// filepath: src/utils/memoryFlow.ts

import { BufferMemory } from "langchain/memory";

import { ShortTermOperations } from '../memory/shortTerm';

import { LongTermOperations } from '../memory/longTerm';

import { Chroma } from "@langchain/community/vectorstores/chroma";

import { OpenAIEmbeddings } from "@langchain/openai";

export class MemoryFlow {

private shortTermOps: ShortTermOperations;

private longTermOps: LongTermOperations;

private shortTermMemory: BufferMemory;

private vectorStore: Chroma;

constructor(shortTermMemory: BufferMemory, vectorStore: Chroma) {

this.shortTermMemory = shortTermMemory;

this.vectorStore = vectorStore;

this.shortTermOps = new ShortTermOperations();

this.longTermOps = new LongTermOperations(vectorStore, new OpenAIEmbeddings());

}

async manage(input: string, response: string) {

// Always store in short-term memory

await this.shortTermOps.storeContext(

this.shortTermMemory,

input,

response

);

// Evaluate importance for long-term storage

if (this.shouldStoreInLongTerm(input, response)) {

await this.longTermOps.storeInformation(

`Q: ${input}\nA: ${response}`,

{ source: "conversation", timestamp: new Date().toISOString() }

);

}

}

private shouldStoreInLongTerm(input: string, response: string): boolean {

// Implement your logic to determine what's worth keeping long-term

// This is a simple example - you might use more sophisticated heuristics

const combined = input + response;

return (

combined.length > 100 || // Longer exchanges might be more valuable

input.includes("remember") || // Explicit request to remember

response.includes("important") // Content deemed important

);

}

}

Understanding Memory Flow Management

The MemoryFlow class decides which information is stored where, using a few simple heuristics:

- Selective Memory Storage:

- Always stores conversations in short-term memory for immediate context

- Selectively stores important information in long-term memory based on criteria

- Storage Decision Logic:

- The shouldStoreInLongTerm method implements heuristics to determine what’s worth keeping

- Considers length, explicit requests to remember, and content importance

- This prevents the long-term memory from being filled with trivial conversation

- Memory Operations:

- Uses the specialized operations classes for each memory type

- Adds metadata like timestamps and source information to long-term storage

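This filtering helps the agent build a useful knowledge base over time without storing every conversation fragment, which would dilute the quality of retrieved knowledge. The criteria shown are deliberately simple: a 100-character threshold and two keyword checks will admit most exchanges, so tune them for your own use case.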
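To make the storage decision easier to test and tune, the heuristic can be pulled out of the class into a pure function that also reports why an exchange qualified. The following is a minimal sketch, not part of the tutorial's code: the names StorageDecision and decideLongTermStorage are hypothetical, and unlike shouldStoreInLongTerm it ignores letter case in the keyword checks.

```typescript
// Illustrative sketch only: StorageDecision and decideLongTermStorage are hypothetical names.
interface StorageDecision {
  store: boolean;
  reasons: string[];
}

function decideLongTermStorage(
  input: string,
  response: string,
  minLength: number = 100 // same threshold as the length check in shouldStoreInLongTerm
): StorageDecision {
  const reasons: string[] = [];

  // Longer exchanges are assumed to carry more information
  if ((input + response).length > minLength) reasons.push("length");

  // Unlike the class method, these checks ignore letter case (an assumption of this sketch)
  if (input.toLowerCase().includes("remember")) reasons.push("explicit-request");
  if (response.toLowerCase().includes("important")) reasons.push("marked-important");

  return { store: reasons.length > 0, reasons };
}

console.log(decideLongTermStorage("hi", "hello").store);
console.log(decideLongTermStorage("Please REMEMBER my name is Ada", "Noted.").reasons);
```

Returning the reasons alongside the decision makes it possible to log why an exchange reached long-term memory, which helps when adjusting the threshold or keywords.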

Error Handling and Debugging

Create a file for error handling and debugging:

// filepath: src/utils/memoryErrorHandler.ts

import { BufferMemory } from "langchain/memory";


export class MemoryErrorHandler {

async handleMemoryError(operation: () => Promise<any>, fallback: any) {

try {

return await operation();

} catch (error) {

console.error('Memory operation error:', error);

return fallback;

}

}

async handleShortTermOverflow(memory: BufferMemory, threshold: number = 20) {

try {

const variables = await memory.loadMemoryVariables({});

if (variables.chat_history && variables.chat_history.length > threshold) {

// Keep only the most recent messages

const recent = variables.chat_history.slice(-Math.floor(threshold / 2));

await memory.clear();

// Re-add recent messages

for (let i = 0; i < recent.length; i += 2) {

if (i + 1 < recent.length) {

await memory.saveContext(

{ input: recent[i].content },

{ output: recent[i + 1].content }

);

}

}

}

} catch (error) {

console.error('Error handling memory overflow:', error);

}

}

async handleContextConfusion(shortTerm: any[], longTerm: any[]) {

// Implement context disambiguation

return {

prioritized: this.prioritizeContext(shortTerm, longTerm),

filtered: this.removeRedundancy(shortTerm, longTerm)

};

}

private prioritizeContext(shortTerm: any[], longTerm: any[]) {

// Prioritize short-term context over conflicting long-term information

return shortTerm;

}

private removeRedundancy(shortTerm: any[], longTerm: any[]) {

// Remove duplicated information between contexts

const shortTermContent = new Set(shortTerm.map(item =>

typeof item === 'string' ? item : item.content || item.pageContent

));

return longTerm.filter(item => {

const content = typeof item === 'string' ? item : item.pageContent;

return !shortTermContent.has(content);

});

}

}

Understanding Memory Error Handling

The MemoryErrorHandler class provides robust error handling and memory optimization:

- Error Resilience:

- The handleMemoryError method provides a try/catch wrapper with fallback values

- Ensures memory errors don’t crash the entire application

- Logs detailed error information for debugging

- Memory Overflow Protection:

- handleShortTermOverflow prevents memory bloat by pruning conversation history

- Implements a sliding window approach that keeps only recent messages

- Preserves conversation continuity while managing memory constraints

- Context Disambiguation:

- handleContextConfusion resolves conflicts between memory types

- prioritizeContext gives short-term context precedence over conflicting long-term information (as written, it simply returns the short-term items)

- removeRedundancy eliminates duplicate information between memory types

Together, these helpers make the agent more reliable by containing common memory-related failures and keeping short-term memory bounded.
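The sliding window in handleShortTermOverflow re-saves messages in index pairs, which assumes the retained slice starts with a human message. A minimal sketch of a more defensive variant is shown below. It is an illustration under stated assumptions rather than the tutorial's implementation: ChatTurn and recentPairs are hypothetical names, and messages are modeled as plain objects with a role field instead of LangChain message classes.

```typescript
// Illustrative sketch only: ChatTurn and recentPairs are hypothetical names.
interface ChatTurn {
  role: "human" | "ai";
  content: string;
}

// Returns the [input, output] pairs worth re-saving after the memory is cleared.
function recentPairs(history: ChatTurn[], threshold: number = 20): Array<[string, string]> {
  // Group the history into complete human -> ai exchanges, skipping orphaned messages
  const pairs: Array<[string, string]> = [];
  for (let i = 0; i + 1 < history.length; i++) {
    if (history[i].role === "human" && history[i + 1].role === "ai") {
      pairs.push([history[i].content, history[i + 1].content]);
      i++; // the ai message has been consumed as part of this pair
    }
  }

  // At or below the threshold nothing is pruned
  if (history.length <= threshold) return pairs;

  // threshold / 2 messages corresponds to threshold / 4 exchanges; keep at least one
  const keep = Math.max(1, Math.floor(threshold / 4));
  return pairs.slice(-keep);
}

const history: ChatTurn[] = [];
for (let n = 0; n < 12; n++) {
  history.push({ role: "human", content: `question ${n}` });
  history.push({ role: "ai", content: `answer ${n}` });
}
console.log(recentPairs(history, 20).length);
```

Each returned pair could then be passed to memory.saveContext as the input and output of one exchange, so a human message is never stored as an answer.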

Creating a Practical Example

Let’s create a practical implementation file:

// filepath: src/index.ts

import { ChatOpenAI } from "@langchain/openai";


import { MemoryManager } from './utils/memoryManager';

import { TypeScriptAgent } from './agent';

import dotenv from 'dotenv';

// Load environment variables

dotenv.config();

async function main() {

// Initialize OpenAI API key

const apiKey = process.env.OPENAI_API_KEY;

if (!apiKey) {

throw new Error("Please set the OPENAI_API_KEY environment variable");

}

console.log("Initializing agent...");

// Create memories

const { shortTerm, longTerm, vectorStore } = await MemoryManager.createMemories();

// Create LLM instance

const llm = new ChatOpenAI({ modelName: "gpt-4o-mini" });

// Create agent

const agent = new TypeScriptAgent({

shortTermMemory: shortTerm,

longTermMemory: longTerm,

llm,

vectorStore

});

console.log("Agent initialized successfully!");

// Test the agent

const queries = [

"What is LangGraph?",

"How does memory work in the agent?",

"Can you explain more about short-term memory?",

"What's the difference with long-term memory?"

];

// Run test queries

for (const query of queries) {

console.log(`\nProcessing query: "${query}"`);

// Use agent.invoke() instead of agent.graph.invoke()

const result = await agent.invoke(query);

console.log(`Response: ${result.response}`);

}

// Persist memory before exiting

await MemoryManager.persistMemory(vectorStore);

console.log("Test completed.");

}

// Execute the main function

main().catch(error => {

console.error("Error in main execution:", error);

process.exit(1);

});

To run the application:

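npx tsx src/index.ts

The console output should look similar to the following (the model's response text will vary between runs):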

Initializing agent...

Setting up LangGraph state graph...

Agent initialized successfully!

Processing query: "What is LangGraph?"

TypeScriptAgent.invoke called with input: What is LangGraph?

Loading existing memory for initial state

Existing memory loaded

Pre-loading long-term memory for initial state...

Pre-loaded 4 documents from long-term memory

Attempting to invoke graph with initial state

Retrieving memories for input: What is LangGraph?

No chat history in state, loading from memory

Chat history for context: 0 messages

Generating response...

Invoking LLM with context and user input...

Generated response

Updating memory with new conversation...

Using directly stored LLM response for memory update

Saving to memory

Updated short-term memory

Updated long-term memory

Graph invoke successful

Using directly stored LLM response

Response: LangGraph is a framework that integrates natural language processing with graph-based technologies, enabling users to analyze and understand complex relationships in textual data. It supports tasks such as semantic search, knowledge graph creation, and the extraction of insights from large volumes of text. LangGraph is useful in various applications, including enhancing search engines, developing chatbots, and converting unstructured data into structured knowledge for more informed decision-making. If you're interested in specific features or applications of LangGraph, feel free to ask!

Understanding the Example Implementation

The index.ts file provides an automated way to test our agent with a sequence of related queries:

- Initialization Process:

- Sets up both memory systems through the MemoryManager

- Creates an LLM instance with a specific model

- Initializes the TypeScriptAgent with all required components

- Test Sequence:

- Defines a series of related queries that build on each other

- The sequence progressively explores the agent’s topic understanding and memory usage

- Each query should demonstrate how previous information influences future responses

- Response Handling:

- Uses the agent’s custom invoke() method to process each query

- Ensures responses are properly extracted from the graph execution

- Displays the response for each query in sequence

- Memory Persistence:

- Persists memory to ChromaDB before exiting

- Ensures knowledge gained during the session isn’t lost

This automated testing approach allows developers to verify that the agent properly builds and maintains context across a conversation without manual interaction.

Full Example Implementation

To create a conversational agent service, we’ll create a dedicated implementation file:

// filepath: src/services/conversationalAgent.ts

import { TypeScriptAgent } from '../agent';

import { MemoryErrorHandler } from '../utils/memoryErrorHandler';

import { ChatOpenAI } from "@langchain/openai";

import { BufferMemory } from "langchain/memory";

import { BaseRetriever } from "@langchain/core/retrievers";

export class ConversationalAgent {

private agent: TypeScriptAgent;

private errorHandler: MemoryErrorHandler;

constructor(agent: TypeScriptAgent) {

this.agent = agent;

this.errorHandler = new MemoryErrorHandler();

}

async chat(userInput: string): Promise<string> {

try {

console.log("ConversationalAgent.chat called with input:", userInput);

// Use the custom invoke method instead of accessing graph.invoke directly

console.log("Invoking agent...");

const result = await this.agent.invoke(userInput);

if (!result) {

console.warn("No result returned from agent");

// Check if we have a direct LLM response stored in the agent

if ((this.agent as any)._lastResponse) {

console.log("Using directly stored LLM response from agent");

return (this.agent as any)._lastResponse;

}

return "I couldn't process your request. Please try again.";

}

if (!result.response) {

console.warn("No response property in result");

// Check if we have a direct LLM response stored in the agent

if ((this.agent as any)._lastResponse) {

console.log("Using directly stored LLM response from agent");

return (this.agent as any)._lastResponse;

}

// Try to extract a response from the result

if (result.generate_response && result.generate_response.response) {

console.log("Found response in generate_response node");

return result.generate_response.response;

}

// Check if we have any other property that might contain the response

const possibleResponseKeys = Object.keys(result).filter(key =>

typeof result[key] === 'string' &&

result[key].length > 0 &&

key !== 'input'

);

if (possibleResponseKeys.length > 0) {

console.log("Found possible response in property:", possibleResponseKeys[0]);

return result[possibleResponseKeys[0]];

}

console.warn("No suitable response found in result");

return "I couldn't generate a response";

}

return result.response;

} catch (error) {

console.error("Error in conversation:", error);

// Check if we have a direct LLM response stored in the agent even though there was an error

if ((this.agent as any)._lastResponse) {

console.log("Using directly stored LLM response from agent despite error");

return (this.agent as any)._lastResponse;

}

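// Await the handler so the detailed log is written before returning

await this.errorHandler.handleMemoryError(

async () => {

// Error objects serialize to "{}" with JSON.stringify, so log the stack when available

console.error("Detailed error:", error instanceof Error ? (error.stack ?? error.message) : JSON.stringify(error, null, 2));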

return null;

},

null

);

return "I'm having trouble processing that request right now.";

}

}

async startConversation() {

return "Hello! I'm a LangGraph.js agent with memory. How can I help you today?";

}

}

// Usage example

export async function createConversationalService(

shortTermMemory: BufferMemory,

longTermMemory: BaseRetriever | null,

llm: ChatOpenAI,

vectorStore: any

) {

const agent = new TypeScriptAgent({

shortTermMemory,

longTermMemory,

llm,

vectorStore

});

return new ConversationalAgent(agent);

}

Understanding the Conversational Agent Service

The ConversationalAgent class provides a high-level API for interacting with our agent:

- Service Architecture:

- Wraps the TypeScriptAgent to provide a simpler interface

- Handles response extraction and error management

- Creates a clean separation between the agent implementation and user interaction

- Robust Response Handling:

- Implements multiple fallback strategies for extracting responses

- Checks for responses in various locations (direct storage, graph nodes, result properties)

- Provides meaningful fallback responses when extraction fails

- Error Resilience:

- Uses comprehensive try/catch blocks to prevent crashes

- Leverages the MemoryErrorHandler for specialized memory error handling

- Maintains a usable interface even when internal components fail

- Factory Function:

- createConversationalService simplifies instantiation of the complete service

- Centralizes the creation of all required components

- Makes integration with other applications straightforward

This service layer pattern creates a clean, high-level API that hides the complexity of the underlying agent architecture while providing robust error handling.
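The fallback chain inside chat() can be easier to follow, and to unit test, when expressed as one pure function. The sketch below mirrors the order of checks described above. It is an illustration only: extractResponse, AgentResult, and FALLBACK_MESSAGE are hypothetical names, the cached response is passed in as a parameter instead of being read from the agent, and a single fallback message is used for every failure case.

```typescript
// Illustrative sketch only: extractResponse is a hypothetical helper.
type AgentResult = Record<string, unknown> | null | undefined;

const FALLBACK_MESSAGE = "I couldn't generate a response";

function nonEmptyString(value: unknown): value is string {
  return typeof value === "string" && value.length > 0;
}

function extractResponse(result: AgentResult, lastResponse?: string): string {
  // 1. The normal case: the graph returned a response field
  const direct = result?.response;
  if (nonEmptyString(direct)) return direct;

  // 2. A response cached by the agent during the LLM call
  if (nonEmptyString(lastResponse)) return lastResponse;

  if (!result) return FALLBACK_MESSAGE;

  // 3. A response nested under the generate_response node
  const node = result.generate_response;
  if (typeof node === "object" && node !== null) {
    const nested = (node as Record<string, unknown>).response;
    if (nonEmptyString(nested)) return nested;
  }

  // 4. Last resort: the first non-empty string property other than the input
  for (const [key, value] of Object.entries(result)) {
    if (key !== "input" && nonEmptyString(value)) return value;
  }

  return FALLBACK_MESSAGE;
}

console.log(extractResponse({ input: "q", generate_response: { response: "nested answer" } }));
```

Keeping the extraction separate from logging and error handling means each fallback step can be verified with plain objects, without running the graph or calling the LLM.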

Running the Application

Finally, create a script to run the application:

// filepath: src/run.ts

import { ChatOpenAI } from "@langchain/openai";

import { MemoryManager } from './utils/memoryManager';

import { createConversationalService } from './services/conversationalAgent';

import * as readline from 'readline';

import dotenv from 'dotenv';

// Load environment variables

dotenv.config();

async function runConversation() {

// Initialize memories

const { shortTerm, longTerm, vectorStore } = await MemoryManager.createMemories();

// Create LLM

const llm = new ChatOpenAI({ modelName: "gpt-4o-mini" }); // same model as src/index.ts

// Create conversational agent

const conversationAgent = await createConversationalService(

shortTerm,

longTerm,

llm,

vectorStore

);

// Create readline interface

const rl = readline.createInterface({

input: process.stdin,

output: process.stdout

});

// Start conversation

console.log("\n" + await conversationAgent.startConversation());

// Handle conversation loop

const askQuestion = () => {

rl.question('\nYou: ', async (input) => {

if (input.toLowerCase() === 'exit') {

await MemoryManager.persistMemory(vectorStore);

console.log("Conversation ended. Memory persisted.");

rl.close();

return;

}

const response = await conversationAgent.chat(input);

console.log(`\nAgent: ${response}`);

// Optimize memory on roughly one in five interactions, chosen at random

if (Math.random() < 0.2) {

await MemoryManager.optimizeMemory(shortTerm);

}

askQuestion();

});

};

askQuestion();

}

console.log("Starting LangGraph Agent with Memory...");

runConversation().catch(error => {

console.error("Error running conversation:", error);

});

Understanding the Interactive Console Application

The run.ts file creates an interactive command-line interface for conversing with our agent:

- Setup Process:

- Initializes memory systems, LLM, and the conversational agent service

- Creates a readline interface for user input/output

- Starts with a welcome message from the agent

- Conversation Loop:

- Implements a recursive question/answer pattern with the askQuestion function

- Handles the “exit” command gracefully, persisting memory before closing

- Passes user input to the agent and displays responses

- Memory Optimization:

- Triggers memory optimization at random, on roughly 20% of turns, to prevent excessive growth

- Demonstrates how maintenance can be integrated into normal operation

- Ensures the agent remains performant during extended conversations

- Error Handling:

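- Attaches a .catch() handler to runConversation() so failures during setup are reported

- Relies on ConversationalAgent.chat() catching its own errors, so a failed turn returns a fallback message instead of ending the session

- Does not wrap the readline callback in a try/catch, so an error thrown by MemoryManager.optimizeMemory or MemoryManager.persistMemory is not caught here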

This interactive implementation complements the automated testing in index.ts, providing a way for users to directly experience the agent’s memory capabilities through natural conversation.
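Because the random trigger can fire twice in a row or not at all for a long stretch, a deterministic schedule may be preferable for maintenance. The sketch below counts turns and reports when a fixed interval has elapsed. TurnCounter is a hypothetical helper, not part of the tutorial's code, and the interval of 5 is an assumption chosen to match the average rate of the random check.

```typescript
// Illustrative sketch only: TurnCounter is a hypothetical helper.
class TurnCounter {
  private turns = 0;
  private readonly interval: number;

  constructor(interval: number) {
    if (!Number.isInteger(interval) || interval < 1) {
      throw new RangeError("interval must be a positive integer");
    }
    this.interval = interval;
  }

  // Records one completed turn and reports whether maintenance is due
  tick(): boolean {
    this.turns += 1;
    return this.turns % this.interval === 0;
  }
}

// Simulate twelve turns and record the turns on which maintenance would run
const counter = new TurnCounter(5);
const due: number[] = [];
for (let turn = 1; turn <= 12; turn++) {
  if (counter.tick()) due.push(turn);
}
console.log(due);
```

In run.ts, the Math.random() check could be replaced with a call to counter.tick() before MemoryManager.optimizeMemory(shortTerm), so optimization runs on every fifth turn.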

Running the Project

To run the application:

npx tsx src/run.ts

To verify that long-term memory persists across sessions:

- Tell the chatbot your name and occupation

- Type exit to end the session, then start a new one and ask “What is [your name]’s job?”

- The chatbot should answer correctly

Conclusion

We’ve built a comprehensive TypeScript LangGraph agent with both short-term and long-term memory capabilities. By organizing our code into separate files with clear responsibilities, we’ve created a maintainable and extensible system that can be adapted for various use cases.

The file structure provides a clean separation of concerns:

- Memory components in src/memory/

- Agent implementation in src/agent.ts

- Utility classes in src/utils/

- Service implementations in src/services/

This modular approach allows for easy expansion and integration with other systems. You can build upon this foundation to create more sophisticated agents for specific domains or use cases.