Creating modern command-line interfaces doesn’t have to mean sacrificing user experience or developer productivity. React Ink brings the familiar component-based architecture of React to terminal applications, enabling developers to build rich, interactive CLIs using TypeScript.

In this tutorial, we’ll build a fully functional LLM chat application that demonstrates several of Ink’s core features:

- Real-time user input handling

- Component-based UI architecture

- Dynamic view switching

- Custom interactive elements

- Styled terminal output

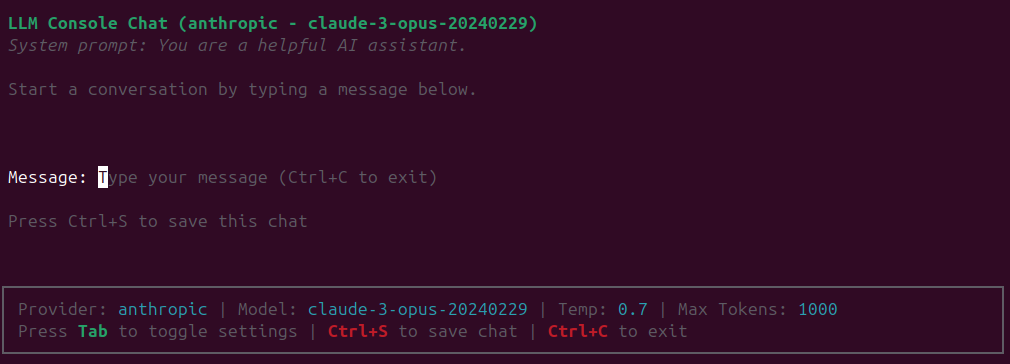

What We’re Building

We’ll create a text-based LLM console application using:

- React Ink for the terminal interface

- TypeScript for type safety

- LangChain for LLM integration

- Custom components for chat and settings interfaces

Prerequisites

Before we begin, ensure you have:

- Node.js 18 or later installed

- Basic familiarity with React and TypeScript

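- A code editor (VS Code recommended)

- An Anthropic or OpenAI API key (the app reads ANTHROPIC_API_KEY or OPENAI_API_KEY from the environment or from a .env file)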

Project Setup

Let’s start by creating a new TypeScript project and installing the necessary dependencies. Open your terminal and run:

```bash
mkdir llm-text-console
cd llm-text-console
npm init -y
```

Installing Dependencies

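Install the required packages. Ink, ink-text-input, dotenv, and the LangChain packages ship their own TypeScript definitions, so only React and Node need separate @types packages:

```bash
npm install ink react ink-text-input @langchain/core @langchain/anthropic @langchain/openai dotenv
npm install --save-dev typescript ts-node @types/react @types/node
```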

TypeScript Configuration

Create a tsconfig.json file in your project root. The NodeNext module settings match the project’s ES module setup and the .js extensions used in the relative import paths throughout this tutorial:

```json
{
  "compilerOptions": {
    "target": "ES2022",
    "module": "NodeNext",
    "moduleResolution": "NodeNext",
    "jsx": "react",
    "outDir": "dist",
    "strict": true,
    "esModuleInterop": true,
    "skipLibCheck": true,
    "forceConsistentCasingInFileNames": true
  },
  "include": ["src/**/*"],
  "exclude": ["node_modules", "dist"]
}
```

Project Structure

Create the following directory structure:

```bash
mkdir -p src/components src/services src/types
```

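Update your package.json to include the following fields. Setting "type": "module" makes Node treat the compiled output as ES modules, which Ink (published as an ES module) and the top-level await in src/index.tsx both need:

```json
{
  "type": "module",
  "scripts": {
    "build": "tsc",
    "start": "node dist/index.js",
    "dev": "ts-node --esm src/index.tsx"
  }
}
```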

Now our project is set up with:

- TypeScript configuration for React/Ink development

- Essential dependencies for building terminal UIs

- A modular project structure

- NPM scripts for development and building

Application Layout

Our LLM chat application follows a modular structure that separates concerns and keeps the code maintainable. Here’s the complete project structure:

```text
llm-text-console/
├── src/
│   ├── components/
│   │   ├── ChatInterface.tsx      # Main chat view and chat history display
│   │   ├── MessageInput.tsx       # Text input component
│   │   ├── SelectInput.tsx        # Arrow-key option picker
│   │   └── SettingsInterface.tsx  # Settings view
│   ├── services/
│   │   └── llmService.ts          # LLM integration, configuration, and chat saving
│   ├── types/
│   │   └── index.ts               # Shared interfaces
│   └── index.tsx                  # Application entry point and status bar
├── .env                           # Optional: API keys and save settings
├── package.json
└── tsconfig.json
```

Key Components

- Entry Point (index.tsx)
  - Initializes the application
  - Handles global keyboard shortcuts
  - Manages view switching between chat and settings
  - Renders the status bar

- Chat Interface Components
  - ChatInterface: main chat view; displays the chat history and saves chats
  - MessageInput: handles user text input

- Settings Interface
  - Manages LLM configuration
  - Provides model selection through the SelectInput component
  - Controls temperature and other parameters

- Services Layer
  - llmService: handles LLM communication, configuration updates, and saving chats to disk

- Type Definitions
  - Shared message, configuration, and chat-state interfaces

Next, create the shared types file:

src/types/index.ts

```ts
export interface Message {
  role: 'user' | 'assistant' | 'system';
  content: string;
}

export interface LLMConfig {
  provider: 'anthropic' | 'openai';
  model: string;
  apiKey?: string;
  temperature: number;
  maxTokens: number;
  systemPrompt?: string;
  saveDirectory?: string;
  saveFormat?: 'json' | 'markdown';
}

export interface ChatState {
  messages: Message[];
  isLoading: boolean;
  error: string | null;
}
```
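The components below track messages and loading state with separate useState hooks, so ChatState is not used directly. As a sketch of how its three fields could change together, here is a hypothetical chatReducer (the action names are assumptions, not part of the tutorial) that could be passed to React's useReducer. The interfaces are copied locally so the block runs on its own:

```typescript
interface Message {
  role: 'user' | 'assistant' | 'system';
  content: string;
}

interface ChatState {
  messages: Message[];
  isLoading: boolean;
  error: string | null;
}

// Hypothetical actions covering one request/response cycle
type ChatAction =
  | { type: 'send'; content: string }
  | { type: 'receive'; content: string }
  | { type: 'fail'; error: string };

function chatReducer(state: ChatState, action: ChatAction): ChatState {
  switch (action.type) {
    case 'send':
      // Append the user's message, start loading, and clear any old error
      return {
        messages: [...state.messages, { role: 'user', content: action.content }],
        isLoading: true,
        error: null,
      };
    case 'receive':
      // Append the assistant's reply and stop loading
      return {
        ...state,
        messages: [...state.messages, { role: 'assistant', content: action.content }],
        isLoading: false,
      };
    case 'fail':
      // Keep the history intact; only record the error and stop loading
      return { ...state, isLoading: false, error: action.error };
  }
}

const initialChatState: ChatState = { messages: [], isLoading: false, error: null };
```

In a component, `const [state, dispatch] = useReducer(chatReducer, initialChatState)` would replace the separate hooks. Because the reducer is a pure function, it can be tested without rendering anything to a terminal.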

Creating the Main App Component

The main App component serves as the root of our terminal application. Let’s examine how it manages state, handles user input, and renders different views:

Create the file src/index.tsx in the project:

```tsx
#!/usr/bin/env node
import React, { useState } from 'react';
import { render, Box, Text, useInput } from 'ink';
import { LLMService, defaultConfig } from './services/llmService.js';
import { ChatInterface } from './components/ChatInterface.js';
import { SettingsInterface } from './components/SettingsInterface.js';

const App = () => {
  const [llmService] = useState(() => new LLMService(defaultConfig));
  const [view, setView] = useState<'chat' | 'settings'>('chat');

  useInput((input, key) => {
    // Ink exits on Ctrl+C by default (the exitOnCtrlC render option), so this
    // branch only runs if that option is turned off
    if (key.ctrl && input === 'c') {
      process.exit(0);
    }

    // Use Tab key for settings toggle (universally supported)
    if (key.tab) {
      setView(view === 'chat' ? 'settings' : 'chat');
    }
  });

  return (
    <Box flexDirection="column" height="100%">
      {view === 'chat' ? (
        <ChatInterface llmService={llmService} />
      ) : (
        <SettingsInterface
          llmService={llmService}
          onSave={() => setView('chat')}
        />
      )}

      <Box flexDirection="column" marginTop={1} height={6}>
        <Box borderStyle="single" borderColor="gray" paddingX={1} flexDirection="column">
          <Box width="100%" justifyContent="space-between">
            <Text color="gray" wrap="truncate">
              Provider: <Text color="cyan">{llmService.getConfig().provider}</Text> |
              Model: <Text color="cyan">{llmService.getConfig().model}</Text> |
              Temp: <Text color="cyan">{llmService.getConfig().temperature}</Text> |
              Max Tokens: <Text color="cyan">{llmService.getConfig().maxTokens}</Text>
            </Text>
          </Box>
          <Box><Text> </Text></Box>
          <Box>
            <Text color="gray">
              Press <Text color="green" bold>Tab</Text> to toggle settings | <Text color="red" bold>Ctrl+S</Text> to save chat | <Text color="red" bold>Ctrl+C</Text> to exit
            </Text>
          </Box>
        </Box>
      </Box>
    </Box>
  );
};

// Render the app and keep the process alive until Ink exits
const app = render(<App />);
await app.waitUntilExit();
```

Key Features

- State Management

  ```tsx
  const [llmService] = useState(() => new LLMService(defaultConfig));
  const [view, setView] = useState<'chat' | 'settings'>('chat');
  ```

  - Uses React’s `useState` for view switching and service initialization
  - Lazy initialization ensures the LLM service is created only once
  - View state controls which interface is displayed

- Keyboard Input Handling

  ```tsx
  useInput((input, key) => {
    if (key.ctrl && input === 'c') {
      process.exit(0);
    }
    if (key.tab) {
      setView(view === 'chat' ? 'settings' : 'chat');
    }
  });
  ```

  - Implements global shortcuts using Ink’s `useInput` hook
  - Provides navigation with the Tab key
  - Ctrl+C exits (Ink also exits on Ctrl+C by default)

- Component Layout

  ```tsx
  <Box flexDirection="column" height="100%">
    {view === 'chat' ? (
      <ChatInterface llmService={llmService} />
    ) : (
      <SettingsInterface llmService={llmService} onSave={() => setView('chat')} />
    )}
  </Box>
  ```

  - Uses Ink’s `Box` component for flexbox-style layouts
  - Stacks the active view above the status bar in a single column
  - Uses conditional rendering for view switching

- Status Bar Integration

  ```tsx
  <Box borderStyle="single" borderColor="gray" paddingX={1}>
    <Text color="gray" wrap="truncate">
      Provider: <Text color="cyan">{llmService.getConfig().provider}</Text> |
      Model: <Text color="cyan">{llmService.getConfig().model}</Text>
    </Text>
  </Box>
  ```

  - Displays the current configuration
  - Sets `Text` colors so values stand out
  - Truncates the line in narrow terminal windows (`wrap="truncate"`)

- Service Architecture
  - Centralizes LLM configuration and state
  - Shares one service instance across components
  - Enables easy configuration updates through settings
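The status bar’s text depends only on the current config, so it can also be built and checked as a plain function. The sketch below uses a hypothetical formatStatusLine helper that produces the same text as the status bar, and a hypothetical truncateEnd that roughly approximates what `wrap="truncate"` does (cut the line and end it with an ellipsis). Ink performs the real truncation itself and measures terminal cell widths, which this sketch does not:

```typescript
// Fields the status bar reads from llmService.getConfig()
interface StatusConfig {
  provider: string;
  model: string;
  temperature: number;
  maxTokens: number;
}

// Hypothetical helper: the status bar's text as a single string
function formatStatusLine(config: StatusConfig): string {
  return [
    `Provider: ${config.provider}`,
    `Model: ${config.model}`,
    `Temp: ${config.temperature}`,
    `Max Tokens: ${config.maxTokens}`,
  ].join(' | ');
}

// Rough approximation of end truncation: keep width - 1 characters and append
// an ellipsis. Counts UTF-16 code units, not terminal cells.
function truncateEnd(text: string, width: number): string {
  if (width <= 0) return '';
  if (text.length <= width) return text;
  return text.slice(0, width - 1) + '…';
}
```

Keeping the formatting in a pure function makes it easy to unit-test the status text without rendering Ink components.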

Building Interactive Components

Now that we have our application structure in place, let’s build the interactive components that make up our LLM chat interface. We’ll create reusable React components that handle user input, display messages, and manage settings. Each component demonstrates key Ink features like keyboard handling, styled output, and dynamic updates. We’ll start with the ChatInterface component, which serves as the main interaction point for our application.

Chat Interface

The ChatInterface component serves as our main interaction view, handling message display, user input, and chat history management. Let’s examine its key features:

Create the file src/components/ChatInterface.tsx in the project:

```tsx
import React, { useState, useMemo, useCallback } from 'react';
import { Box, Text, useInput, useApp } from 'ink';
import { Message } from '../types/index.js';
import { LLMService } from '../services/llmService.js';
import { MessageInput } from './MessageInput.js';

interface ChatInterfaceProps {
  llmService: LLMService;
}

export const ChatInterface: React.FC<ChatInterfaceProps> = ({ llmService }) => {
  const [messages, setMessages] = useState<Message[]>([]);
  const systemPrompt = llmService.getConfig().systemPrompt;
  const [isLoading, setIsLoading] = useState(false);
  const [saveStatus, setSaveStatus] = useState<string | null>(null);
  const { exit } = useApp();

  useInput((input, key) => {
    // Only reached if Ink's default exit-on-Ctrl+C behavior is disabled
    if (key.ctrl && input === 'c') {
      exit();
    }

    // Save chat with Ctrl+S
    if (key.ctrl && input === 's') {
      if (messages.length > 0) {
        try {
          const savedPath = llmService.saveChat(messages);
          setSaveStatus(`Chat saved to: ${savedPath}`);
          // Clear status after 3 seconds
          setTimeout(() => setSaveStatus(null), 3000);
        } catch (error) {
          setSaveStatus(`Error saving chat: ${error instanceof Error ? error.message : String(error)}`);
        }
      } else {
        setSaveStatus('No messages to save');
        // Clear status after 3 seconds
        setTimeout(() => setSaveStatus(null), 3000);
      }
    }
  });

  const handleSubmit = useCallback(async (value: string) => {
    if (!value.trim()) return;

    const userMessage: Message = { role: 'user', content: value };
    setMessages(prev => [...prev, userMessage]);
    setIsLoading(true);

    try {
      // `messages` still holds the history from this render, so append the new
      // message explicitly. LLMService adds the system prompt itself.
      const response = await llmService.sendMessage([...messages, userMessage]);
      const assistantMessage: Message = { role: 'assistant', content: response };
      setMessages(prev => [...prev, assistantMessage]);
    } catch (error) {
      console.error('Error getting response:', error);
    } finally {
      setIsLoading(false);
    }
  }, [messages, llmService]);

  // Memoize the message list so it is rebuilt only when messages or isLoading
  // change, not on unrelated updates such as saveStatus
  const messagesDisplay = useMemo(() => (
    <Box flexDirection="column" flexGrow={1} marginBottom={1}>
      {messages.length === 0 ? (
        <Text color="gray">Start a conversation by typing a message below.</Text>
      ) : (
        messages.map((msg, i) => (
          <Box key={i} flexDirection="column" marginBottom={1}>
            <Text bold color={msg.role === 'user' ? 'blue' : 'green'}>
              {msg.role === 'user' ? 'You' : 'Assistant'}:
            </Text>
            <Text>{msg.content}</Text>
          </Box>
        ))
      )}
      {isLoading && (
        <Text color="yellow">Assistant is thinking...</Text>
      )}
    </Box>
  ), [messages, isLoading]);

  // Memoize the header; it depends only on the service and system prompt
  const header = useMemo(() => (
    <Box flexDirection="column" marginBottom={1}>
      <Text bold color="green">
        LLM Console Chat ({llmService.getConfig().provider} - {llmService.getConfig().model})
      </Text>
      {systemPrompt && (
        <Text color="gray" italic>
          System prompt: {systemPrompt}
        </Text>
      )}
    </Box>
  ), [llmService, systemPrompt]);

  return (
    <Box flexDirection="column" padding={1} height="100%">
      {header}
      {messagesDisplay}
      {saveStatus && (
        <Box marginBottom={1}>
          <Text color={saveStatus.startsWith('Error') ? 'red' : 'green'}>
            {saveStatus}
          </Text>
        </Box>
      )}
      <MessageInput onSubmit={handleSubmit} />
      <Box marginTop={1}>
        <Text color="gray">Press Ctrl+S to save this chat</Text>
      </Box>
    </Box>
  );
};
```

Key Features

- State Management

  ```tsx
  const [messages, setMessages] = useState<Message[]>([]);
  const [isLoading, setIsLoading] = useState(false);
  const [saveStatus, setSaveStatus] = useState<string | null>(null);
  ```

  - Tracks chat history
  - Manages loading states during LLM responses
  - Handles save operation feedback
  - Because App unmounts ChatInterface while the settings view is open, this state (including the chat history) starts empty again when you return to chat; lifting `messages` into App would preserve it

- Message Handling

  ```tsx
  const handleSubmit = useCallback(async (value: string) => {
    if (!value.trim()) return;
    const userMessage: Message = { role: 'user', content: value };
    setMessages(prev => [...prev, userMessage]);
    setIsLoading(true);
    try {
      const response = await llmService.sendMessage([...messages, userMessage]);
      const assistantMessage: Message = { role: 'assistant', content: response };
      setMessages(prev => [...prev, assistantMessage]);
    } finally {
      setIsLoading(false);
    }
  }, [messages, llmService]);
  ```

  - Processes user input
  - Sends the conversation to the LLM service
  - Updates chat history
  - Handles loading states

- Performance Optimization

  ```tsx
  const messagesDisplay = useMemo(() => (
    <Box flexDirection="column" flexGrow={1} marginBottom={1}>
      {messages.map((msg, i) => (
        <Box key={i} flexDirection="column" marginBottom={1}>
          <Text bold color={msg.role === 'user' ? 'blue' : 'green'}>
            {msg.role === 'user' ? 'You' : 'Assistant'}:
          </Text>
          <Text>{msg.content}</Text>
        </Box>
      ))}
    </Box>
  ), [messages, isLoading]);
  ```

  - Uses `useMemo` so the message list is rebuilt only when its inputs change
  - Provides color-coded message display
  - Handles empty state and loading indicators

- Chat Persistence

  ```tsx
  useInput((input, key) => {
    if (key.ctrl && input === 's' && messages.length > 0) {
      try {
        const savedPath = llmService.saveChat(messages);
        setSaveStatus(`Chat saved to: ${savedPath}`);
        setTimeout(() => setSaveStatus(null), 3000);
      } catch (error) {
        setSaveStatus(`Error saving chat: ${error}`);
      }
    }
  });
  ```

  - Implements chat saving functionality
  - Provides feedback on save operations
  - Handles error states
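handleSubmit calls setMessages and then sends `[...messages, userMessage]` rather than reading messages again, because a state setter does not change the `messages` variable captured by the current render. A minimal pure sketch of that step, using a hypothetical buildTurn helper and a local copy of the Message type:

```typescript
interface Message {
  role: 'user' | 'assistant' | 'system';
  content: string;
}

// Hypothetical helper: build the message to store and the history to send for
// one turn, without mutating the captured history array.
function buildTurn(
  history: readonly Message[],
  text: string,
): { userMessage: Message; request: Message[] } {
  const userMessage: Message = { role: 'user', content: text };
  return { userMessage, request: [...history, userMessage] };
}
```

Returning a new array keeps React state immutable; the same userMessage object can be passed to setMessages and included in the request.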

Message Input Component

The MessageInput component provides a clean, focused interface for user input with real-time updates and submission handling:

src/components/MessageInput.tsx:

```tsx
import React, { useState } from 'react';
import { Box, Text } from 'ink';
import TextInput from 'ink-text-input';

interface MessageInputProps {
  onSubmit: (message: string) => void;
}

export const MessageInput: React.FC<MessageInputProps> = ({ onSubmit }) => {
  const [input, setInput] = useState('');

  const handleSubmit = (value: string) => {
    // Ignore empty or whitespace-only input
    if (!value.trim()) return;
    onSubmit(value);
    setInput('');
  };

  return (
    <Box>
      <Text>Message: </Text>
      <TextInput
        value={input}
        onChange={setInput}
        onSubmit={handleSubmit}
        placeholder="Type your message (Ctrl+C to exit)"
      />
    </Box>
  );
};
```

Key Features

- State Management

  ```tsx
  const [input, setInput] = useState('');
  ```

  - Maintains input field state
  - Makes TextInput a controlled component
  - Updates the displayed value on every keystroke

- Input Validation

  ```tsx
  const handleSubmit = (value: string) => {
    if (!value.trim()) return;
    onSubmit(value);
    setInput('');
  };
  ```

  - Prevents empty or whitespace-only submissions
  - Sends the text as typed (it is checked, not trimmed)
  - Clears input after successful submission

- Component Integration

  ```tsx
  <TextInput
    value={input}
    onChange={setInput}
    onSubmit={handleSubmit}
    placeholder="Type your message (Ctrl+C to exit)"
  />
  ```

  - Uses the TextInput component from the ink-text-input package
  - Shows a placeholder hint while the field is empty
  - Submits on Enter

- Layout Structure

  ```tsx
  <Box>
    <Text>Message: </Text>
    <TextInput value={input} onChange={setInput} onSubmit={handleSubmit} />
  </Box>
  ```

  - Keeps the label and input on a single row
  - Provides clear input labeling
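The validation in handleSubmit can be isolated as a pure function. This sketch uses a hypothetical prepareSubmission helper that mirrors the component: whitespace-only input is rejected, and the text is otherwise passed through unchanged. The `trim` flag is an assumed variant, not part of the tutorial, for apps that prefer to strip surrounding whitespace before sending:

```typescript
// Returns the text to send, or null if the input should be ignored.
// trim=false matches MessageInput; trim=true is a hypothetical variant.
function prepareSubmission(value: string, trim = false): string | null {
  if (!value.trim()) return null;
  return trim ? value.trim() : value;
}
```

In MessageInput, handleSubmit would call onSubmit only when the result is not null.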

Settings Interface

The Settings Interface provides a form-like experience for configuring the LLM service, with keyboard navigation and real-time updates:

src/components/SettingsInterface.tsx:

```tsx
import React, { useState, useEffect } from 'react';
import { Box, Text, useInput } from 'ink';
import TextInput from 'ink-text-input';
import { LLMService, availableModels } from '../services/llmService.js';
import { LLMConfig } from '../types/index.js';
import { SelectInput } from './SelectInput.js';

interface SettingsInterfaceProps {
  llmService: LLMService;
  onSave: () => void;
}

export const SettingsInterface: React.FC<SettingsInterfaceProps> = ({
  llmService,
  onSave
}) => {
  const currentConfig = llmService.getConfig();
  const [provider, setProvider] = useState<'anthropic' | 'openai'>(currentConfig.provider);
  const [model, setModel] = useState<string>(currentConfig.model);
  const [apiKey, setApiKey] = useState(currentConfig.apiKey || '');
  const [temperature, setTemperature] = useState<string>(currentConfig.temperature.toString());
  const [maxTokens, setMaxTokens] = useState<string>(currentConfig.maxTokens.toString());
  const [systemPrompt, setSystemPrompt] = useState<string>(currentConfig.systemPrompt || 'You are a helpful AI assistant.');
  const [saveFormat, setSaveFormat] = useState<'json' | 'markdown'>(currentConfig.saveFormat || 'json');
  const [currentField, setCurrentField] = useState<'provider' | 'model' | 'apiKey' | 'temperature' | 'maxTokens' | 'systemPrompt' | 'saveFormat'>('provider');

  // When the provider changes, fall back to its first model if the current one isn't offered
  useEffect(() => {
    if (!availableModels[provider].includes(model)) {
      setModel(availableModels[provider][0]);
    }
  }, [provider]);

  // Build a new config from the form state and hand it to the service
  const saveSettings = () => {
    // Check for NaN instead of using `|| 0.7`, which would turn a valid 0 into 0.7
    const parsedTemperature = parseFloat(temperature);

    const newConfig: LLMConfig = {
      provider,
      model,
      apiKey: apiKey || undefined,
      temperature: Number.isNaN(parsedTemperature) ? 0.7 : parsedTemperature,
      maxTokens: parseInt(maxTokens, 10) || 1000,
      systemPrompt: systemPrompt || undefined,
      saveDirectory: llmService.getConfig().saveDirectory,
      saveFormat
    };

    llmService.updateConfig(newConfig);
    onSave();
  };

  // Main input handler for navigation. Only Enter advances: Tab is handled by
  // the App component, which switches back to the chat view.
  useInput((input, key) => {
    if (key.return) {
      if (currentField === 'provider') {
        setCurrentField('model');
      } else if (currentField === 'model') {
        setCurrentField('apiKey');
      } else if (currentField === 'apiKey') {
        setCurrentField('temperature');
      } else if (currentField === 'temperature') {
        setCurrentField('maxTokens');
      } else if (currentField === 'maxTokens') {
        setCurrentField('systemPrompt');
      } else if (currentField === 'systemPrompt') {
        setCurrentField('saveFormat');
      } else {
        // Last field: save settings
        saveSettings();
      }
    }
  });

  // Save-format toggle: up/down switches between json and markdown.
  // Enter is left to the handler above, so settings are saved only once.
  useInput((input, key) => {
    if (currentField === 'saveFormat' && (key.upArrow || key.downArrow)) {
      setSaveFormat(saveFormat === 'json' ? 'markdown' : 'json');
    }
  });

  return (
    <Box flexDirection="column" padding={1}>
      <Box marginBottom={1}>
        <Text bold color="green">LLM Settings</Text>
      </Box>

      <Box flexDirection="column" marginBottom={2}>
        <Box marginBottom={1} flexDirection="column">
          <Text bold>Provider: </Text>
          {currentField === 'provider' ? (
            <SelectInput
              items={['anthropic', 'openai']}
              value={provider}
              onChange={(value) => setProvider(value as 'anthropic' | 'openai')}
              onSubmit={() => setCurrentField('model')}
            />
          ) : (
            <Text>{provider}</Text>
          )}
        </Box>

        <Box marginBottom={1} flexDirection="column">
          <Text bold>Model: </Text>
          {currentField === 'model' ? (
            <SelectInput
              items={availableModels[provider]}
              value={model}
              onChange={setModel}
              onSubmit={() => setCurrentField('apiKey')}
            />
          ) : (
            <Text>{model}</Text>
          )}
        </Box>

        <Box marginBottom={1}>
          <Text bold>API Key: </Text>
          {currentField === 'apiKey' ? (
            <TextInput
              value={apiKey}
              onChange={setApiKey}
              placeholder="Enter API key (or leave blank to use env variable)"
              onSubmit={() => setCurrentField('temperature')}
            />
          ) : (
            <Text>{apiKey ? '********' : 'Using environment variable'}</Text>
          )}
        </Box>

        <Box marginBottom={1}>
          <Text bold>Temperature: </Text>
          {currentField === 'temperature' ? (
            <TextInput
              value={temperature}
              onChange={setTemperature}
              placeholder="Enter temperature (0.0-1.0)"
              onSubmit={() => setCurrentField('maxTokens')}
            />
          ) : (
            <Text>{temperature}</Text>
          )}
        </Box>

        <Box marginBottom={1}>
          <Text bold>Max Tokens: </Text>
          {currentField === 'maxTokens' ? (
            <TextInput
              value={maxTokens}
              onChange={setMaxTokens}
              placeholder="Enter max output tokens"
              onSubmit={() => setCurrentField('systemPrompt')}
            />
          ) : (
            <Text>{maxTokens}</Text>
          )}
        </Box>

        <Box marginBottom={1}>
          <Text bold>System Prompt: </Text>
          {currentField === 'systemPrompt' ? (
            <TextInput
              value={systemPrompt}
              onChange={setSystemPrompt}
              placeholder="Enter system prompt"
              onSubmit={() => setCurrentField('saveFormat')}
            />
          ) : (
            <Text>{systemPrompt}</Text>
          )}
        </Box>

        <Box marginBottom={1}>
          <Text bold>Save Format: </Text>
          {currentField === 'saveFormat' ? (
            <Box flexDirection="column">
              <Text>Current format: {saveFormat}</Text>
              <Box marginY={1}>
                <Text color={saveFormat === 'json' ? 'green' : undefined}>
                  {saveFormat === 'json' ? '› ' : '  '}
                  json {saveFormat === 'json' ? ' ✓' : ''}
                </Text>
              </Box>
              <Box marginY={1}>
                <Text color={saveFormat === 'markdown' ? 'green' : undefined}>
                  {saveFormat === 'markdown' ? '› ' : '  '}
                  markdown {saveFormat === 'markdown' ? ' ✓' : ''}
                </Text>
              </Box>
              <Box marginTop={1}>
                <Text color="gray">Use up/down arrows to select, Enter to save</Text>
              </Box>
            </Box>
          ) : (
            <Text>{saveFormat}</Text>
          )}
        </Box>
      </Box>

      <Text color="gray">
        Press Enter to move to the next field; Enter on the last field saves. Press Tab to return to chat without saving.
      </Text>
    </Box>
  );
};
```
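The if/else chain in the navigation handler visits the fields in a fixed order. One alternative, sketched here with a hypothetical FIELD_ORDER array and nextField helper (neither is part of the tutorial’s code), keeps that order in data so adding a field means editing one list:

```typescript
type SettingsField =
  | 'provider'
  | 'model'
  | 'apiKey'
  | 'temperature'
  | 'maxTokens'
  | 'systemPrompt'
  | 'saveFormat';

// Order in which the form visits fields (same order as the if/else chain)
const FIELD_ORDER: readonly SettingsField[] = [
  'provider',
  'model',
  'apiKey',
  'temperature',
  'maxTokens',
  'systemPrompt',
  'saveFormat',
];

// Returns the next field, or null after the last field (time to save)
function nextField(current: SettingsField): SettingsField | null {
  const index = FIELD_ORDER.indexOf(current);
  return index >= 0 && index < FIELD_ORDER.length - 1 ? FIELD_ORDER[index + 1] : null;
}
```

Inside the component, the Enter handler would set the next field when nextField returns one and call saveSettings() when it returns null.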

Key Features

- Field Navigation

  ```tsx
  const [currentField, setCurrentField] = useState<'provider' | 'model' | 'apiKey' | 'temperature' | 'maxTokens' | 'systemPrompt' | 'saveFormat'>('provider');

  useInput((input, key) => {
    if (key.return) {
      // Navigate through fields in sequence
      if (currentField === 'provider') setCurrentField('model');
      else if (currentField === 'model') setCurrentField('apiKey');
      // ... continue through the remaining fields, then save
    }
  });
  ```

  - Enter moves between fields; Tab is left to the App component, which returns to chat
  - Only the focused field shows an editable input; the others display their current values
  - Automatic progression through settings, saving after the last field

- Dynamic Model Selection

  ```tsx
  useEffect(() => {
    if (!availableModels[provider].includes(model)) {
      setModel(availableModels[provider][0]);
    }
  }, [provider]);
  ```

  - Updates available models based on provider
  - Maintains valid model selection
  - Prevents invalid configurations

- Settings Persistence

  ```tsx
  const saveSettings = () => {
    const parsedTemperature = parseFloat(temperature);
    const newConfig: LLMConfig = {
      provider,
      model,
      apiKey: apiKey || undefined,
      temperature: Number.isNaN(parsedTemperature) ? 0.7 : parsedTemperature,
      maxTokens: parseInt(maxTokens, 10) || 1000,
      systemPrompt: systemPrompt || undefined,
      saveDirectory: llmService.getConfig().saveDirectory,
      saveFormat
    };
    llmService.updateConfig(newConfig);
    onSave();
  };
  ```

  - Falls back to defaults when temperature or max tokens are not numbers
  - Updates service configuration
  - Returns to the chat view, where the status bar shows the new settings

- Interactive UI Elements

  ```tsx
  <Box flexDirection="column" padding={1}>
    <Box marginBottom={1}>
      <Text bold>Provider: </Text>
      {currentField === 'provider' ? (
        <SelectInput
          items={['anthropic', 'openai']}
          value={provider}
          onChange={(value) => setProvider(value as 'anthropic' | 'openai')}
        />
      ) : (
        <Text>{provider}</Text>
      )}
    </Box>
  </Box>
  ```

  - Conditional rendering of inputs
  - Clear visual hierarchy
  - Responsive layout design
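Numeric settings arrive as strings from TextInput. A common pitfall is `parseFloat(text) || 0.7`, which replaces a legitimate temperature of 0 with the fallback. This sketch uses a hypothetical parseNumberField helper that falls back only when the text is not a number and can optionally clamp the result. The range is an assumption you would choose per provider: the form’s placeholder suggests 0 to 1 for temperature, while OpenAI accepts values up to 2:

```typescript
interface NumberFieldOptions {
  integer?: boolean; // parse with parseInt (base 10) instead of parseFloat
  min?: number;      // optional lower bound (assumed, not part of the tutorial)
  max?: number;      // optional upper bound (assumed, not part of the tutorial)
}

// Parse a form field, using the fallback only when the input is not a number
function parseNumberField(text: string, fallback: number, options: NumberFieldOptions = {}): number {
  const parsed = options.integer ? Number.parseInt(text, 10) : Number.parseFloat(text);
  if (Number.isNaN(parsed)) return fallback;
  // Clamp into [min, max]; missing bounds leave that side unbounded
  return Math.min(options.max ?? Infinity, Math.max(options.min ?? -Infinity, parsed));
}
```

For example, saveSettings could use `parseNumberField(temperature, 0.7, { min: 0, max: 1 })` and `parseNumberField(maxTokens, 1000, { integer: true, min: 1 })`.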

Select Input

The SelectInput component provides a dropdown-like list for choosing one option in the terminal. It handles keyboard navigation and selection with visual feedback. Create src/components/SelectInput.tsx:

```tsx
import React, { useState, useEffect } from 'react';
import { Box, Text, useInput } from 'ink';

interface SelectInputProps {
  items: string[];
  value: string;
  onChange: (value: string) => void;
  onSubmit?: () => void;
}

export const SelectInput: React.FC<SelectInputProps> = ({
  items,
  value,
  onChange,
  onSubmit
}) => {
  // Find the initial index, defaulting to 0 if not found
  const initialIndex = Math.max(0, items.findIndex(item => item === value));
  const [highlightedIndex, setHighlightedIndex] = useState(initialIndex);

  // Update highlighted index when value changes externally
  useEffect(() => {
    const index = items.findIndex(item => item === value);
    if (index !== -1) {
      setHighlightedIndex(index);
    }
  }, [value, items]);

  useInput((input, key) => {
    if (key.upArrow) {
      const newIndex = highlightedIndex > 0 ? highlightedIndex - 1 : items.length - 1;
      setHighlightedIndex(newIndex);
    } else if (key.downArrow) {
      const newIndex = highlightedIndex < items.length - 1 ? highlightedIndex + 1 : 0;
      setHighlightedIndex(newIndex);
    } else if (key.return) {
      // Report the highlighted item to the parent first, then let it move on.
      // The parent receives the value through onChange, so no global is needed.
      onChange(items[highlightedIndex]);
      onSubmit?.();
    }
  });

  return (
    <Box flexDirection="column">
      {items.map((item, index) => (
        <Box key={item}>
          <Text color={index === highlightedIndex ? 'green' : undefined}>
            {index === highlightedIndex ? '› ' : '  '}
            {item}
            {item === value ? ' ✓' : ''}
          </Text>
        </Box>
      ))}
      <Box marginTop={1}>
        <Text color="gray">Use arrow keys to navigate, Enter to select</Text>
      </Box>
    </Box>
  );
};
```

Key Features

- State Management

  ```tsx
  const [highlightedIndex, setHighlightedIndex] = useState(initialIndex);
  ```

  - Tracks the currently highlighted option
  - Keeps highlighting separate from the selected value, which changes only on Enter
  - Syncs with external value changes

- Keyboard Navigation

  ```tsx
  useInput((input, key) => {
    if (key.upArrow) {
      const newIndex = highlightedIndex > 0 ? highlightedIndex - 1 : items.length - 1;
      setHighlightedIndex(newIndex);
    }
    // ...downArrow mirrors this, and Enter commits the highlighted item
  });
  ```

  - Handles up/down arrow keys
  - Implements circular navigation (wraps at both ends of the list)
  - Provides immediate visual feedback

- Visual Indicators

  ```tsx
  <Text color={index === highlightedIndex ? 'green' : undefined}>
    {index === highlightedIndex ? '› ' : '  '}
    {item}
    {item === value ? ' ✓' : ''}
  </Text>
  ```

  - Highlights the focused item in green with a › marker
  - Marks the selected value with ✓

- Accessibility

  ```tsx
  <Text color="gray">Use arrow keys to navigate, Enter to select</Text>
  ```

  - Shows the available keyboard controls below the list
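The circular navigation above can be expressed with modular arithmetic. This sketch uses hypothetical moveHighlight and initialHighlight helpers that compute the same indices as SelectInput’s ternaries and its `Math.max(0, findIndex(...))` initialization, but as pure functions that are easy to test:

```typescript
// Move the highlight one step, wrapping from the last item to the first and back
function moveHighlight(index: number, delta: 1 | -1, length: number): number {
  if (length <= 0) return 0;
  // Adding length before % keeps the result non-negative when moving up from 0
  return (index + delta + length) % length;
}

// Start on the current value, or on the first item if the value isn't listed
function initialHighlight(items: readonly string[], value: string): number {
  return Math.max(0, items.indexOf(value));
}
```

In the component, the arrow-key branches would become `setHighlightedIndex(moveHighlight(highlightedIndex, -1, items.length))` and the equivalent call with `1`.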

LLM Service

The LLM Service handles all interactions with language models, providing a clean interface for managing configurations and processing messages. This service supports both Anthropic and OpenAI models through LangChain. Create src/services/llmService.ts:

```ts
import { ChatAnthropic } from '@langchain/anthropic';
import { ChatOpenAI } from '@langchain/openai';
import { HumanMessage, AIMessage, SystemMessage } from '@langchain/core/messages';
import { LLMConfig, Message } from '../types/index.js';
import dotenv from 'dotenv';
import fs from 'fs';
import path from 'path';

// Load variables such as ANTHROPIC_API_KEY from a .env file, if present
dotenv.config();

export class LLMService {
  private model: ChatAnthropic | ChatOpenAI;
  private config: LLMConfig;

  constructor(config: LLMConfig) {
    this.config = config;
    this.model = this.createModel(config);
  }

  // Create the LangChain chat model for the configured provider. An API key in
  // the config takes precedence over the provider's environment variable.
  private createModel(config: LLMConfig): ChatAnthropic | ChatOpenAI {
    if (config.provider === 'anthropic') {
      return new ChatAnthropic({
        apiKey: config.apiKey || process.env.ANTHROPIC_API_KEY,
        modelName: config.model || 'claude-3-opus-20240229',
        temperature: config.temperature,
        maxTokens: config.maxTokens,
      });
    }
    return new ChatOpenAI({
      apiKey: config.apiKey || process.env.OPENAI_API_KEY,
      modelName: config.model || 'gpt-4-turbo',
      temperature: config.temperature,
      maxTokens: config.maxTokens,
    });
  }

  private convertToLangChainMessages(messages: Message[]) {
    return messages.map(msg => {
      if (msg.role === 'user') {
        return new HumanMessage(msg.content);
      } else if (msg.role === 'assistant') {
        return new AIMessage(msg.content);
      } else {
        return new SystemMessage(msg.content);
      }
    });
  }

  async sendMessage(messages: Message[]): Promise<string> {
    try {
      let langchainMessages = this.convertToLangChainMessages(messages);

      // Add system prompt if configured and not already present
      if (this.config.systemPrompt && !messages.some(msg => msg.role === 'system')) {
        langchainMessages = [new SystemMessage(this.config.systemPrompt), ...langchainMessages];
      }

      const response = await this.model.invoke(langchainMessages);
      return response.content.toString();
    } catch (error) {
      console.error('Error calling LLM:', error);
      throw new Error(`Failed to get response from ${this.config.provider}: ${error instanceof Error ? error.message : String(error)}`);
    }
  }

  getConfig(): LLMConfig {
    return this.config;
  }

  updateConfig(newConfig: Partial<LLMConfig>): void {
    // Merge into a new object; fields passed as undefined (such as a cleared
    // API key) are cleared rather than silently kept
    this.config = { ...this.config, ...newConfig };

    // Recreate the model with the new configuration
    this.model = this.createModel(this.config);
  }

  saveChat(messages: Message[], title?: string): string {
    try {
      // Create save directory if it doesn't exist
      const saveDir = this.config.saveDirectory || process.env.CHAT_SAVE_DIRECTORY || './chats';
      if (!fs.existsSync(saveDir)) {
        fs.mkdirSync(saveDir, { recursive: true });
      }

      // Use the configured format, defaulting to JSON
      const formatToUse = this.config.saveFormat === 'markdown' ? 'markdown' : 'json';

      // Generate filename based on date, optional title, and format
      const timestamp = new Date().toISOString().replace(/[:.]/g, '-');
      const fileExtension = formatToUse === 'markdown' ? 'md' : 'json';
      const filename = title
        ? `${timestamp}-${title.replace(/[^a-z0-9]/gi, '-').toLowerCase()}.${fileExtension}`
        : `${timestamp}-chat.${fileExtension}`;
      const filePath = path.join(saveDir, filename);

      if (formatToUse === 'markdown') {
        const formattedDate = new Date().toLocaleString();
        let markdownContent = `# Chat - ${formattedDate}\n\n`;
        markdownContent += `**Model**: ${this.config.model} (${this.config.provider})\n\n`;
        markdownContent += `---\n\n`;

        messages.forEach(msg => {
          if (msg.role === 'system') {
            markdownContent += `## System\n\n${msg.content}\n\n---\n\n`;
          } else if (msg.role === 'user') {
            markdownContent += `## User\n\n${msg.content}\n\n---\n\n`;
          } else if (msg.role === 'assistant') {
            markdownContent += `## Assistant\n\n${msg.content}\n\n---\n\n`;
          }
        });

        fs.writeFileSync(filePath, markdownContent);
      } else {
        // Format as JSON (default)
        const chatData = {
          timestamp: new Date().toISOString(),
          model: this.config.model,
          provider: this.config.provider,
          messages
        };
        fs.writeFileSync(filePath, JSON.stringify(chatData, null, 2));
      }

      return filePath;
    } catch (error) {
      console.error('Error saving chat:', error);
      throw new Error(`Failed to save chat: ${error instanceof Error ? error.message : String(error)}`);
    }
  }
}

// Available models by provider
export const availableModels = {
  anthropic: [
    'claude-3-7-sonnet-20250219',
    'claude-3-5-haiku-20241022',
    'claude-3-5-sonnet-20241022',
    'claude-3-5-sonnet-20240620',
    'claude-3-opus-20240229',
    'claude-3-sonnet-20240229',
    'claude-3-haiku-20240307'
  ],
  openai: [
    'gpt-4-turbo',
    'gpt-4o',
    'gpt-4',
    'gpt-3.5-turbo'
  ]
};

// Default configuration
export const defaultConfig: LLMConfig = {
  provider: 'anthropic',
  model: 'claude-3-opus-20240229',
  temperature: 0.7,
  maxTokens: 1000,
  systemPrompt: 'You are a helpful AI assistant.',
  saveDirectory: process.env.CHAT_SAVE_DIRECTORY || './chats',
  saveFormat: process.env.CHAT_SAVE_FORMAT === 'markdown' ? 'markdown' : 'json',
};
```
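The role mapping and system-prompt rule in sendMessage can be checked without calling a provider. In this sketch, a hypothetical Tagged type stands in for LangChain’s HumanMessage, AIMessage, and SystemMessage classes, and a hypothetical toTagged function applies the same rule: prepend the configured prompt only when the conversation has no system message of its own:

```typescript
interface Message {
  role: 'user' | 'assistant' | 'system';
  content: string;
}

// Stand-in for the LangChain message classes: just records the kind
type Tagged = { kind: 'human' | 'ai' | 'system'; content: string };

function toTagged(messages: readonly Message[], systemPrompt?: string): Tagged[] {
  const converted = messages.map((m): Tagged => ({
    kind: m.role === 'user' ? 'human' : m.role === 'assistant' ? 'ai' : 'system',
    content: m.content,
  }));
  // Same rule as sendMessage: only add the configured prompt if none is present
  if (systemPrompt && !messages.some(m => m.role === 'system')) {
    return [{ kind: 'system', content: systemPrompt }, ...converted];
  }
  return converted;
}
```

Separating this logic from the network call makes it straightforward to test the message shaping independently of any API key.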

Key Features

- Model Management

  ```ts
  private createModel(config: LLMConfig): ChatAnthropic | ChatOpenAI {
    if (config.provider === 'anthropic') {
      return new ChatAnthropic({
        apiKey: config.apiKey || process.env.ANTHROPIC_API_KEY,
        modelName: config.model || 'claude-3-opus-20240229',
        temperature: config.temperature,
        maxTokens: config.maxTokens,
      });
    }
    // ...otherwise build a ChatOpenAI instance the same way
  }
  ```

  - Chooses ChatAnthropic or ChatOpenAI based on the configured provider
  - Uses the configured API key, or falls back to ANTHROPIC_API_KEY or OPENAI_API_KEY

- Message Type Conversion

  ```ts
  private convertToLangChainMessages(messages: Message[]) {
    return messages.map(msg => {
      if (msg.role === 'user') {
        return new HumanMessage(msg.content);
      } else if (msg.role === 'assistant') {
        return new AIMessage(msg.content);
      } else {
        return new SystemMessage(msg.content);
      }
    });
  }
  ```

  - Converts application messages to LangChain format
  - Handles all message types (user, assistant, system)
  - Maintains message role distinction

- Message Processing

  ```ts
  async sendMessage(messages: Message[]): Promise<string> {
    try {
      let langchainMessages = this.convertToLangChainMessages(messages);
      // Add system prompt if configured and not already present
      if (this.config.systemPrompt && !messages.some(msg => msg.role === 'system')) {
        langchainMessages = [new SystemMessage(this.config.systemPrompt), ...langchainMessages];
      }
      const response = await this.model.invoke(langchainMessages);
      return response.content.toString();
    } catch (error) {
      throw new Error(`Failed to get response: ${error}`);
    }
  }
  ```

  - Handles message conversion
  - Prepends the configured system prompt when the conversation has none
  - Wraps provider errors in a descriptive message

- Configuration Management

  ```ts
  updateConfig(newConfig: Partial<LLMConfig>): void {
    this.config = { ...this.config, ...newConfig };
    // Recreate the model with the new configuration
    this.model = this.createModel(this.config);
  }
  ```

  - Handles partial configuration updates
  - Maintains configuration state
  - Reinitializes the model so new settings apply to the next message

- Message History Management

  ```ts
  saveChat(messages: Message[], title?: string): string {
    const saveDir = this.config.saveDirectory || process.env.CHAT_SAVE_DIRECTORY || './chats';
    const formatToUse = this.config.saveFormat === 'markdown' ? 'markdown' : 'json';
    const timestamp = new Date().toISOString().replace(/[:.]/g, '-');
    const fileExtension = formatToUse === 'markdown' ? 'md' : 'json';
    const filePath = path.join(saveDir, `${timestamp}-chat.${fileExtension}`);
    // ... write Markdown or JSON to filePath
    return filePath;
  }
  ```

  - Supports JSON and Markdown export formats
  - Generates timestamped files
  - Creates the save directory if it doesn’t exist
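The file naming and Markdown layout used by saveChat can be isolated from the file system. This sketch uses hypothetical chatFilename and toMarkdownBody helpers that follow the same rules as saveChat: an ISO timestamp with `:` and `.` replaced by `-`, an optional title with non-alphanumeric characters replaced by `-`, an `md` or `json` extension, and one `## Role` section per message. Passing the Date in explicitly is an assumption that makes the output deterministic:

```typescript
interface Message {
  role: 'user' | 'assistant' | 'system';
  content: string;
}

type SaveFormat = 'json' | 'markdown';

// Same naming rules as saveChat, with the date supplied by the caller
function chatFilename(date: Date, format: SaveFormat, title?: string): string {
  const timestamp = date.toISOString().replace(/[:.]/g, '-');
  const extension = format === 'markdown' ? 'md' : 'json';
  const suffix = title ? title.replace(/[^a-z0-9]/gi, '-').toLowerCase() : 'chat';
  return `${timestamp}-${suffix}.${extension}`;
}

// Message sections as saveChat writes them (without the file header)
function toMarkdownBody(messages: readonly Message[]): string {
  const heading = { system: 'System', user: 'User', assistant: 'Assistant' } as const;
  return messages.map(m => `## ${heading[m.role]}\n\n${m.content}\n\n---\n\n`).join('');
}
```

Note that the extension is `md`, not the format name, so a Markdown export of a chat titled "My Chat!" at 2024-05-01T12:34:56.789Z would be named `2024-05-01T12-34-56-789Z-my-chat-.md`.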

Key Takeaways

- Ink provides React components for building CLIs

- Use the `useInput` hook to handle keyboard interactions

- Leverage React patterns like conditional rendering and state management

- Create reusable components for common UI patterns

- Style output with Ink’s built-in components like `Box` and `Text`

The complete example shows how to build a fully interactive terminal application with navigation, input handling, and dynamic UI updates.